SQL Interview Questions Part 2

kindly see the SQL Interview Questions Part 1 as my below link

http://sqltechi.blogspot.in/2012/02/interview-questions-in-sql-2005-and.html

1)If you run DBCC CHECKDB what runs in background?

Checks the logical and physical integrity of all the objects in the specified database by performing the following operations:

DBCC CHECKDB is supported on databases that contain memory-optimized tables but validation only occurs on disk-based tables

Step 2

4)Which DMV can be used to check how many dirty pages exists in the memory for each database?

1.Distribution Server Jobs:

1. Agent history clean up: distribution

2. Distribution clean up: distribution

3. Expired subscription clean up

4. Reinitialize subscriptions having data validation failures

5. Replication agents checkup

6. Replication monitoring refresher for distribution.

7. S1\SUBSCRIBER-AdventureWorks-Publisher1-S1\SUBSCRIBER-3

1. P1\PUBLISHER-AdventureWorks-1

2. P1\PUBLISHER-AdventureWorks-Publisher1-1

3. Subscriber Server Jobs.

The main difference is that a 32-bit app can never address more than 4 GB of memory,

while a 64-bit app can address memory up to 16 exabytes or so.

Snapshot

Serializable (High)

Serializable isolation is implemented b acquiring locks which means the resources are locked for the duration of the current transaction. This isolation level does not have any concurrency side effects but at the cost of significant reduction in concurrency

Snapshot isolation does not acquire locks it maintains versioning in tempdb since snapshot isolation does not lock resources, it can significantly increase the number of concurrent transactions while providing the same level of data consistency as serializable isolation does

if the first transaction is rolled back after the second reads the data the second transaction has dirty data that does not exists any more

Phantom Reads

Phantom Read happens when one transaction executes a query twice and get a different number of rows in the result set each time. This happens when a second transaction inserts a new row that matches the where clause of the query execute by the first transaction

Transaction 1

select * from emp where id between 1 and 3 (2 rows returned)

do some other work/wait--Wait for Delay '00:00:10'

select * from emp where id between 1 and 3 (3 rows returned)

Transaction 2

insert a new emp record at the time of Transaction do some other work/wait

To resolve this we can issue serializable isolation level at transaction 1

this will place range lock between 1 and 3 it prevents any other transaction from inserting new rows with in that range

Buffer pool extension up to SSD (Solid State Disk)

provide the best performance gains for read-heavy OLTP workloads

Increases random I/O Processes with reduced Latency

Only clean pages are written to BPE

Wait type used for BPE I/O to completed is EC

Significantly slow down performance for large serial range scans

SQL Server 2014 also provides a new solid state disk (SSD) integration capability that enables you to use SSD storage to expand SQL Server 2014's buffer pool. The new buffer pool enhancements can help increase performance in systems that have maxed out their memory capability by using high-speed nonvolatile RAM (N V RAM) in the SSD drives as an extension to SQL Server 2014's standard buffer pool. The new buffer pool extensions can provide the best performance gains for read-heavy OLTP workloads

Difference between Merge Replication, Bidirectional Transactional Replication, or Peer-to-Peer Replication

Collation is basically the sort order. There are three types of sort order Dictionary case sensitive, Dictionary - case insensitive and Binary.

What is Microsoft SQL Server Error number Msg 3702

The following commands will not raise an error and successfully drop the database:

5022 is Database mirroring

How can you prepare and how should you apply service packs or hotfixes on the Active/Passive SQL Server cluster?

If

Model DB corrupted, what is the status of SQL Server Instance

In the startup process, once SQL has opened and recovered the master database it needs to bring the other system databases online, starting with TempDB. However, SQL Server cannot simply locate and open the TempDB files and run crash recovery, as it does for the other system databases. TempDB is not like the other databases in the instance in that it's not intended to be a permanent store of data. As such, logging works differently for TempDB; for this database, SQL Server cannot roll transactions forward, which is part of a normal crash recovery. Therefore, instead of simply recovering TempDB on startup, SQL Server must return it to a known state, and the model database provides that known state

what is prerequisite for Cluster and AlwaysON

https://sqltechi.blogspot.in/2017/08/step-by-step-configure-always-on.html

kindly see the SQL Interview Questions Part 1 as my below link

http://sqltechi.blogspot.in/2012/02/interview-questions-in-sql-2005-and.html

1)If you run DBCC CHECKDB what runs in background?

Checks the logical and physical integrity of all the objects in the specified database by performing the following operations:

DBCC CHECKDB is supported on databases that contain memory-optimized tables but validation only occurs on disk-based tables

- Runs DBCC CHECKALLOC on the database.

- Runs DBCC CHECKTABLE on every table and view in the database.

- Runs DBCC CHECKCATALOG on the database.

- Validates the contents of every indexed view in the database.

- Validates link-level consistency between table metadata and file system directories and files when storing varbinary(max) data in the file system using FILESTREAM.

- Validates the Service Broker data in the database.This means that the DBCC CHECKALLOC, DBCC CHECKTABLE, or DBCC CHECKCATALOG commands do not have to be run separately from DBCC CHECKDB

Sample output

DBCC results for 'model'.

Service Broker Msg 9675, Level 10, State 1: Message Types analyzed: 13.

Service Broker Msg 9676, Level 10, State 1: Service Contracts analyzed: 5.

Service Broker Msg 9667, Level 10, State 1: Services analyzed: 3.

Service Broker Msg 9668, Level 10, State 1: Service Queues analyzed: 3.

Service Broker Msg 9669, Level 10, State 1: Conversation Endpoints analyzed: 0.

Service Broker Msg 9674, Level 10, State 1: Conversation Groups analyzed: 0.

Service Broker Msg 9670, Level 10, State 1: Remote Service Bindings analyzed: 0.

DBCC results for 'sys.sysrowsetcolumns'.

There are 630 rows in 7 pages for object 'sys.sysrowsetcolumns'.

DBCC results for 'sys.sysrowsets'.

There are 97 rows in 1 pages for object 'sys.sysrowsets'.

DBCC results for 'sysallocunits'.

There are 195 rows in 3 pages for object 'sysallocunits'.

There are 0 rows in 0 pages for object "sys.sysasymkeys".

DBCC results for 'sys.syssqlguides'.

There are 0 rows in 0 pages for object "sys.syssqlguides".

DBCC results for 'sys.queue_messages_1977058079'.

There are 0 rows in 0 pages for object "sys.queue_messages_1977058079".

DBCC results for 'sys.queue_messages_2009058193'.

There are 0 rows in 0 pages for object "sys.queue_messages_2009058193".

DBCC results for 'sys.queue_messages_2041058307'.

There are 0 rows in 0 pages for object "sys.queue_messages_2041058307".

CHECKDB found 0 allocation errors and 0 consistency errors in database 'model'.

DBCC execution completed. If DBCC printed error messages, contact your system administrator.

Checking both the current and another database

-- Check the current database.

DBCC CHECKDB;

GO

-- Check the AdventureWorks2012 database without nonclustered indexes.

DBCC CHECKDB (AdventureWorks2012, NOINDEX);

Checking the current database, suppressing informational messages

The following example checks the current database and suppresses all informational messages

DBCC CHECKDB WITH NO_INFOMSGS;

2) Difference between SQL patching in 2005 and 2008 Cluster environment

Installing Service Pack SQL Server 2008 in fail over cluster is very different than the SQL Server 2005 cluster failover.

With SQL Server 2005, when you start installing cluster service pack (or hot fix), it must be launched on the active node (node that hosts the instance). When installing the Setup will launch simultaneously “remote silence” on all passive nodes. All nodes in the cluster containing the SQL Server instance are updated in the same time.

With SQL Server 2008, to reduce the downtime, we have revised the method of deployment. Now if you want to apply a service pack (or hot fix), you must install in first on the passive nodes. The passive nodes are updated before the active node.

Therefore, for your instance SQL Server 2008 in fail over cluster, you must follow the scenario below for the application of Service Pack, Cumulative Update or Hot fix :

1. Apply the hot fix on passive node N 2

2. Reboot the passive node N 2

3. Fail over on SQL resource : the passive node become the active node

4. Apply the hot fix on the passive node N 1

5. Reboot the passive node N 1

2. Reboot the passive node N 2

3. Fail over on SQL resource : the passive node become the active node

4. Apply the hot fix on the passive node N 1

5. Reboot the passive node N 1

3)log shipping Job break with maintenance plan of log backup how to resolve?

if log shipping should not break with Full Backp/ Differential Backup but it will break with Log backup on primary

Step 1

we need to stop maintenance plan of log backupStep 2

To resolve this we need to manually restore backed up of Log backup on maintenance plan on secondary then automatically log backup will resume from next time

4)Which DMV can be used to check how many dirty pages exists in the memory for each database?

We can use the following query, which is based on the sys.dm_os_buffer_descriptors DMV, to see how many dirty pages exist in each database:

SELECT db_name(database_id) AS 'Database',count(page_id) AS 'Dirty Pages'

FROM sys.dm_os_buffer_descriptors WHERE is_modified =1 GROUP BY db_name(database_id)ORDER BY count(page_id) DESC

5)What is SQL Server Transaction Log Architecture

Every SQL Server database has a transaction log that records all transactions and the database modifications that are made by each transaction. The transaction log is a critical component of the database and, if there is a system failure, the transaction log might be required to bring your database back to a consistent state.

The SQL Server transaction log operates logically as if the transaction log is a string of log records. Each log record is identified by a log sequence number (LSN). Each new log record is written to the logical end of the log with an LSN that is higher than the LSN of the record before it. Log records are stored in a serial sequence as they are created. Each log record contains the ID of the transaction that it belongs to. For each transaction, all log records associated with the transaction are individually linked in a chain using backward pointers that speed the rollback of the transaction.

The transaction log in a database maps over one or more physical files. Conceptually, the log file is a string of log records. Physically, the sequence of log records is stored efficiently in the set of physical files that implement the transaction log. There must be at least one log file for each database.

The SQL Server Database Engine divides each physical log file internally into a number of virtual log files. Virtual log files have no fixed size, and there is no fixed number of virtual log files for a physical log file. The Database Engine chooses the size of the virtual log files dynamically while it is creating or extending log files. The Database Engine tries to maintain a small number of virtual files. The size of the virtual files after a log file has been extended is the sum of the size of the existing log and the size of the new file increment. The size or number of virtual log files cannot be configured or set by administrators.

The only time virtual log files affect system performance is if the physical log files are defined by small size and growth_increment values. The size value is the initial size for the log file and the growth_increment value is the amount of space added to the file every time new space is required. If the log files grow to a large size because of many small increments, they will have many virtual log files. This can slow down database startup and also log backup and restore operations. We recommend that you assign log files a size value close to the final size required, and also have a relatively large growth_increment value. For more information about these parameters, see ALTER DATABASE File and Filegroup Options (Transact-SQL).

The transaction log is a wrap-around file. For example, consider a database with one physical log file divided into four virtual log files. When the database is created, the logical log file begins at the start of the physical log file. New log records are added at the end of the logical log and expand toward the end of the physical log. Log truncation frees any virtual logs whose records all appear in front of the minimum recovery log sequence number (MinLSN). The MinLSN is the log sequence number of the oldest log record that is required for a successful database-wide rollback. The transaction log in the example database would look similar to the one in the following illustration.

Transaction Log Physical Architecture

When the end of the logical log reaches the end of the physical log file, the new log records wrap around to the start of the physical log file.

What are the permissions required to view execution plans?

Either the user must be mapped to sysadmin, db_owner, db_creator or they will be granted the permission, “Show Plan”.GRANT SHOWPLAN TO [username]

What's the difference between peer-to-peer and merge replication with SQL Server?

- Peer-to-Peer Transactional Replication is typically used to support applications that distribute read operations across a number of server nodes.

Although peer-to-peer replication enables scaling out of read operations, write performance for the topology is like that for a single node, this is because ultimately all inserts, updates, and deletes are propagated to all nodes. If one of the nodes in the system fails, an application layer can redirect the writes for that node to another node, this is not a requirement but does maintain availability if a node fails.

- Merge Replication is bi-directional i.e. read and write operations are propagated to and from all nodes. Merge replication often requires the implementation of conflict resolution.

https://technet.microsoft.com/en-us/library/ms151794(v=sql.105).aspx

In Transaction Replication first we have to create Distribution then Publisher and finally Subscribers.

My Server Information:

Publishing database name: AdventureWorks

Distributor Server Name: D1\DISTRIBUTOR

Publisher Server name: P1\PUBLISHER

Subscriber Server Name: S1\SUBSCRIBER

Publisher Name: Publisher1

1.Distribution Server Jobs:

Here is the list of jobs will create once Distribution Server is configured. Following jobs will create in Distribution server only

1. Agent history clean up: distribution

2. Distribution clean up: distribution

3. Expired subscription clean up

4. Reinitialize subscriptions having data validation failures

5. Replication agents checkup

6. Replication monitoring refresher for distribution.

7. S1\SUBSCRIBER-AdventureWorks-Publisher1-S1\SUBSCRIBER-3

2.Publisher Server Jobs:

Once Distributor is Configured successfully then we have to configure Publisher. The following jobs will create in Publisher server only

Once Distributor is Configured successfully then we have to configure Publisher. The following jobs will create in Publisher server only

1. P1\PUBLISHER-AdventureWorks-1

2. P1\PUBLISHER-AdventureWorks-Publisher1-1

3. Subscriber Server Jobs.

In Subscriber no jobs will create, only in Distributor one job will create while adding subscriber.

S1\SUBSCRIBER-AdventureWorks-Publisher1-NARESH\SUBSCRIBER-3

How to use SQL Server Upgrade Advisor helps you prepare for upgrades

from SQL2005/08/08 R2/2012 to SQL Server 2014

Ref:

https://msdn.microsoft.com/en-us/library/ms144256(v=sql.120).aspx

SQL Server Upgrade Advisor helps you prepare for upgrades to SQL Server 2014. Upgrade Advisor analyzes installed components from earlier versions of SQL Server, and then generates a report that identifies issues to fix either before or after you upgrade.

you run Upgrade Advisor, the Upgrade Advisor Home page appears. From the Home page, you can run the following tools:

- Upgrade Advisor Analysis Wizard

- Upgrade Advisor Report Viewer

- Upgrade Advisor Help

The first time that you use Upgrade Advisor, run the Upgrade Advisor Analysis Wizard to analyze SQL Server components. When the wizard finishes the analysis, view the resulting reports in the Upgrade Advisor Report Viewer. Each report provides links to information in Upgrade Advisor Help that will help you fix or reduce the effect of the known issues.

Upgrade Advisor analyzes the following SQL Server components:

- Database Engine

- Analysis Services

- Reporting Services

- Integration Services

The analysis examines objects that can be accessed, such as scripts, stored procedures, triggers, and trace files. Upgrade Advisor cannot analyze desktop applications or encrypted stored procedures.

Output is in the form of an XML report. View the XML report by using the Upgrade Advisor report viewer.

Upgrade Advisor is available in a feature pack.

Prerequisites for installing and running Upgrade Advisor are as follows:

- Windows Server 2008 SP2, Windows 7 SP1, and Windows Server 2008 R2 SP1.

- Windows Installer beginning with version 4.5.

after you install Upgrade Advisor, you can open it from the Start menu:

- Click Start, point to All Programs, point to Microsoft SQL Server 2014, and then click SQL Server 2014 Upgrade Advisor.

Consider the scenario you have 500 GB large DB you need migrate to other server

what is minimum downtime perform this activity using backup/restore?

Backup method we can ensure use Backup with compression method It will reduce size also restore to other server easily

Can we install SQL server 2008 R2 on Windows server 2012?

Ref:

https://support.microsoft.com/en-us/help/2681562/using-sql-server-in-windows-8-and-later-versions-of-windows-operating-system

SQL 2008 R2 is supported on Windows 2012 but you need to apply the latest service packs for your SQL server version after you install SQL.

SQL Server

|

Windows 10 or Windows Server 2016

|

Windows server 2012/2012 R2

|

Windows 8

/Windows 8.1

|

Windows 7

|

SQL2016

|

SQL Server 2016 RTM

|

SQL Server 2016 RTM

|

SQL Server 2016 RTM

|

Not supported

|

SQL2014

|

SQL Server 2014 Service Pack 1 or a later update.

|

SQL Server 2014 Service Pack 1 or a later update.

|

SQL Server 2014 Service Pack 1 or a later update.

|

Supported

|

SQL2012

|

SQL Server 2012 Service Pack 2 or a later update

|

You can install the release version of SQL Server 2012 or a later version.

|

SQL Server 2012 Service Pack 2 or a later update

|

Supported

|

SQL2008 R2

|

SQL Server 2008 R2 is not supported on Windows 10 or Windows Server 2016.

|

Microsoft SQL Server 2008 R2 Service Pack 1 or a later update.

|

Microsoft SQL Server 2008 R2 Service Pack 1 or a later update.

|

Supported

|

SQL2008

|

SQL Server 2008 is not supported on Windows 10 or Windows Server 2016.

|

You must apply SQL Server 2008 Service Pack 3 or a later update.

|

You must apply SQL Server 2008 Service Pack 3 or a later update.

|

Supported

|

SQL2005

|

Microsoft SQL Server 2005 (the release version and service packs) and earlier versions of SQL Server are not supported on Windows 10

|

Microsoft SQL Server 2005 (the release version and service packs) and earlier versions of SQL Server are not supported on Windows 2012

|

Microsoft SQL Server 2005 (the release version and service packs) and earlier versions of SQL Server are not supported on Windows 8/8.1

|

Supported

|

Note:

Microsoft SQL Server 2005 (the release version and service packs) and earlier versions of SQL Server are not supported on Windows 10, Windows Server 2016, Windows Server 2012 R2, Windows Server 2012, Windows 8.1, or Windows 8. You will receive a warning in the Action Center if Windows 10, Windows 8.1, or Windows 8 detects an instance of SQL Server 2005.

Extended support for SQL Server 2005 ended on April 12, 2016If you are still running SQL Server 2005, you will no longer receive security updates and technical support. We recommend upgrading to SQL Server 2014 and Azure SQL Database to achieve breakthrough performance,

What are the New Features Introduced in SQL 2014

In-Memory OLTP Engine

http://sqlmag.com/sql-server-2014/sql-server-2014-important-new-features

In-Memory OLTP engine is designed for high concurrency and uses a new optimistic concurrency control mechanism to eliminate locking delays

In-Memory OLTP engine is supported on Windows Server 2012 R2, Windows Server 2012, and Windows Server 2008 R2 SP2. In addition, you need to be using the SQL Server 2014 Enterprise, Developer, or Evaluation edition. Notably, In-Memory OLTP won't be supported on the SQL Server 2014 Standard edition.

The In-Memory OLTP engine works with commodity server hardware, but it has a number of limitations. For instance, not all of the data types are supported. Some of the data types that aren't supported for memory-optimized tables include geography, hierarchyid, image, text, ntext, varchar(max), and xml. In addition, several database features can't be used with the new In-Memory OLTP capability. Database mirroring, snapshots, computed columns, triggers, clustered indexes, identity columns, FILESTREAM storage, and FOREIGN KEY, CHECK, and UNIQUE constraints aren't supported.

Enhanced Windows Server 2012 Integration

SQL Server 2014 provides improved integration with Windows Server 2012 R2 and Windows Server 2012. SQL Server 2014 will have the ability to scale up to 640 logical processors and 4TB of memory in a physical environment. It can scale up to 64 virtual processors and 1TB of memory when running on a virtual machine (VM).

SQL Server 2014 also provides a new solid state disk (SSD) integration capability that enables you to use SSD storage to expand SQL Server 2014's buffer pool. The new buffer pool enhancements can help increase performance in systems that have maxed out their memory capability by using high-speed nonvolatile RAM (NVRAM) in the SSD drives as an extension to SQL Server 2014's standard buffer pool. The new buffer pool extensions can provide the best performance gains for read-heavy OLTP workloads.

Enhancements to AlwaysOn Availability Groups

SQL Server 2014's AlwaysOn Availability Groups has been enhanced with support for additional secondary replicas and Windows Azure integration. First introduced with SQL Server 2012, AlwaysOn Availability Groups boosted SQL Server availability by providing the ability to protect multiple databases with up to four secondary replicas. In SQL Server 2014, Microsoft has enhanced AlwaysOn integration by expanding the maximum number of secondary replicas from four to eight. Readable secondary replicas are now available for read-only workloads, even when the primary replica is unavailable. SQL Server 2014 also provides Windows Azure AlwaysOn integration. This new integration feature enables you to create asynchronous availability group replicas in Windows Azure for disaster recovery. In the event of a local database outage, you can run your SQL Server databases from Windows Azure VMs. The new Windows Azure AlwaysOn availability options are fully integrated into SQL Server Management Studio (SSMS).

Enhancements to Backups

Database backups in SQL Server now support built-in database encryption. Previous releases all required a third-party product to encrypt database backups. The backup encryption process uses either a certificate or an asymmetric key to encrypt the data. The supported backup encryption algorithms are Advanced Encryption Standard (AES) 128, AES 192, AES 256, and Triple DES (3DES).

SQL Server 2014 also provides new Windows Azure integration to SQL Server's backup capabilities. You can specify a Windows Azure URL as the target for your SQL Server 2014 database backups. This new Windows Azure backup feature is fully integrated into SSMS.

Updateable Columnstore Indexes

Columnstore indexes are another of Microsoft's high performance in-memory technologies. Microsoft introduced the columnstore index in SQL Server 2012 to provide significantly improved performance for data warehousing types of queries. Microsoft states that for some types of queries, columnstore indexes can provide up to 10x performance improvements. However, in the original implementation of the columnstore indexes, the underlying table had to be read-only. SQL Server 2014 eliminates this restriction. The new updateable columnstore index enables updates to be performed to the underlying table without first needing to drop the columnstore index. A SQL Server 2014 columnstore index must use all of the columns in the table, and it can't be combined with other indexes.

SQL Server Data Tools for Business Intelligence

Previously known as Business Intelligence Development Studio (BIDS) and SQL Server Data Tools (SSDT), the new SQL Server Data Tools for BI (SSDT BI) is used to create SQL Server Analysis Services (SSAS) models, SQL Server Reporting Services (SSRS) reports, and SQL Server Integration Services (SSIS) packages. SSDT BI is based on Microsoft Visual Studio 2012 and supports SSAS and SSRS for SQL Server 2014 and earlier, but SSIS projects are limited to SQL Server 2014. In the pre-release version of SQL Server 2014 Community Technology Preview 2 (CTP2), SQL Server Setup doesn't install SSDT BI. Instead, you must download it separately from the Microsoft Download Center.

Power BI for Office 365 Integration

Power BI for Office 365 is Microsoft's cloud-based BI solution that leverages familiar Office 365 and Excel tools. Power BI for Office 365 provides business insights through data visualization and navigation capabilities. Power BI for Office 365 includes:

- Power Pivot (formerly PowerPivot). This Excel 2010 and Excel 2013 ProPlus add-in enables Excel to perform data analysis on large volumes of data.

- Power View. This Excel 2013 ProPlus add-in provides a Silverlight-based data visualization and navigation tool. Microsoft has extended Power View so that you can now use it with multidimensional models (OLAP cubes). Power View multidimensional models also support queries using Data Analysis Expressions (DAX). Power View's data visualization capabilities have also been enhanced. Power View now supports a number of data visualizations, including tables, matrixes, bubble charts, and geographical maps. To learn more about Power View's new multidimensional support, go to MSDN's Power View for Multidimensional Models web page.

- Power Query (formerly code-named Data Explorer). This Excel 2013 add-in lets you discover and integrate data into Excel. It supports SQL Server data sources as well as external sources such as Windows Azure, text files, XML files, Open Data Protocol (OData) feeds, web pages, Hadoop data sets, and public data sets (e.g., Open Government data from data.gov).

- Power Map (formerly code-named GeoFlow). This Excel 2013 ProPlus add-in provides 3D mapping visualizations.

Also see discontinued features on SQL 2014

https://msdn.microsoft.com/en-us/library/ms144262(v=sql.120).aspx

What's the difference between peer-to-peer replication and merge replication using SQL Server?

- Peer-to-Peer Transactional Replication is typically used to support applications that distribute read operations across a number of server nodes.Peer-to-Peer Replication provides bidirectional synchronization, however, it requires all nodes to be Enterprise Edition

Although peer-to-peer replication enables scaling out of read operations, write performance for the topology is like that for a single node, this is because ultimately all inserts, updates, and deletes are propagated to all nodes. If one of the nodes in the system fails, an application layer can redirect the writes for that node to another node, this is not a requirement but does maintain availability if a node fails.

- Merge Replication is bi-directional i.e. read and write operations are propagated to and from all nodes. Merge replication often requires the implementation of conflict resolution.

what is the difference between 32 bit SQL Server and 64 bit SQL Server Version?

http://searchsqlserver.techtarget.com/tip/32-bit-vs-the-64-bit-SQL-Server-performance-surge

The first main advantage 64-bit provides for SQL Server is the ability to directly deal with larger amounts of memory. The 32-bit system can directly address 4 GB of memory at the most; the only way to get to more memory than that in a 32-bit system is by using techniques like Address Windowing Extensions. Though it will allow you to use anywhere from 32 GB to 64 GB of RAM, it will cost you in performance. It's not possible to use much of this memory in a truly contiguous fashion -- it's often only useful for the sake of caching data pages, not performing actual work.

A 64-bit system, on the other hand, can directly address 1,024 gigabytes of physical memory, so the amount of data SQL Server can cache, hold directly in memory and perform live operations on, is larger by whole orders of magnitude. Operations that might take a long time or would require a lot of work in temporary tables in a 32-bit system can be done in-memory and much faster on a 64-bit system, since there's a lot less paging or blocking going on.

Sixty-four-bit environments also have the ability to support up to 64 processors. SQL Server was originally written to parallelize work as heavily as possible across physical and virtual processors, and in existing 32-bit setups it does so very effectively. If you move an existing SQL Server workload from a 32-bit, four-way system to, say, a 64-bit, 16-way system, the work will not only be spread out across more individual CPUs but also will be handled all the more efficiently.

The difference between 64-bit and 32-bit is not speed. 64-bit is not necessarily faster.

The main difference is that a 32-bit app can never address more than 4 GB of memory,

while a 64-bit app can address memory up to 16 exabytes or so.

The main difference is that a 32-bit app can never address more than 4 GB of memory,

while a 64-bit app can address memory up to 16 exabytes or so.

What is the difference between SQL server 2005 32 bit and 64 bit.

Do we need to have OS 64 bit to install SQL 64 bit.

Can we restore 32 bit version database in 64 bit version and 64 bit db in 32 bit??

Do we need to have OS 64 bit to install SQL 64 bit.

Can we restore 32 bit version database in 64 bit version and 64 bit db in 32 bit??

Ans : The database file formats are identical, as are the backup formats. You need a 64-bit OS to install 64-bit SQL.

Unless you’re talking about Itanium, a 64-bit server with 64-bit OS will run 32-bit software just fine.

The difference between 64-bit and 32-bit is not speed. 64-bit is not necessarily faster.

Unless you’re talking about Itanium, a 64-bit server with 64-bit OS will run 32-bit software just fine.

The difference between 64-bit and 32-bit is not speed. 64-bit is not necessarily faster.

The main difference is that a 32-bit app can never address more than 4 GB of memory,

while a 64-bit app can address memory up to 16 exabytes or so.

What is Transaction level in SQL Server

Transaction Level:

Read Uncommitted (Low)

Read Committed(Default)

Repeatable Read

Serializable (High)

Common concurrency Problems

Dirty Reads

Lost Updates

Non Repeatable Read

Phantom Reads

Isolation Level

|

Dirty Reads

|

Lost Update

|

Non Repeatable Reads

|

Phantom Reads

|

Read Uncommitted

|

Yes

|

Yes

|

Yes

|

Yes

|

Read Committed

|

No

|

Yes

|

Yes

|

Yes

|

Repeatable Read

|

No

|

No

|

No

|

Yes

|

Snapshot

|

No

|

No

|

No

|

No

|

Serializable

|

No

|

No

|

No

|

No

|

What is difference between Serializable and Snapshot isolation level since does not have any concurrency side effects above table

Snapshot isolation does not acquire locks it maintains versioning in tempdb since snapshot isolation does not lock resources, it can significantly increase the number of concurrent transactions while providing the same level of data consistency as serializable isolation does

Read Committed(Default):

we can see committed data if one transaction update record on table of one column at that same time another transaction is trying to update same records it blocked.it needs to wait for first process to complete once complete and committed then we can read the data

Read Uncommitted

One transaction is permitted to read data that has been modified by another transaction that has not yet been committed. so we can get dirty read. we also get same using No Lock Hint option

Repeatable Read

Repeatable read isolation level ensures that the data that one transaction has read will be prevented from being updated or deleted by any other transaction

but it does not prevent new rows from being inserted by other transactions resulting in phantom read concurrency problem.

Snapshot

it will take snapshot of committed records before other transaction modified.

It maintains versioning in tempdb since snapshot isolation does not lock resources

Serializable

Serializable isolation level ensure that the data that one transaction has read, will be prevented from being updated or deleted by any other transaction. It also prevents new rows from being inserted by other transactions, so this isolation level prevents both non-repeatable read and phantom read problems. it acquire locks

Dirty Reads

A dirty read Happens when one transaction is permitted to read data that has been modified by another transaction that has not yet been committed.

if the first transaction is rolled back after the second reads the data the second transaction has dirty data that does not exists any more

Phantom Reads

Phantom Read happens when one transaction executes a query twice and get a different number of rows in the result set each time. This happens when a second transaction inserts a new row that matches the where clause of the query execute by the first transaction

Transaction 1

select * from emp where id between 1 and 3 (2 rows returned)

do some other work/wait--Wait for Delay '00:00:10'

select * from emp where id between 1 and 3 (3 rows returned)

Transaction 2

insert a new emp record at the time of Transaction do some other work/wait

To resolve this we can issue serializable isolation level at transaction 1

this will place range lock between 1 and 3 it prevents any other transaction from inserting new rows with in that range

What is pre step and post step do you perform for Migration from SQL 2008 to SQL 2012

In place method

we need to take system db copy on source and upgrade to existing it more risks cant be roll back

Side by Side Method

Pre check

1.We need to take Backup of user database on source (SQL 2008) don't need to take system db backup

2.We need to script out logins on source (SQL 2008) using sp_help_revlogin script

3.We need to script out Jobs,Linked servers,Replication,Operators,Triggers

4. We need to configure Database Mail on destination we need to take SMTP info on notepad

Process

1. We need to use Restore method to do on Destination (SQL 2014)

2. Change compatibility level as per SQL 2014 on each database

3. We need to set collation on each database

4. we need to apply all logins,Jobs,Linked servers,Replication,Operators,Triggers

5. Solve orphan users if possible

Post Check

1. We need to set Memory settings as per new machine RAM size

2. We need to create tempdb ndf file as per core

3. We Need to check linked servers,logins are working fine or not

4. we need to check application connectivity is working fine or not

Top 10 New Features in SQL Server 2016

Microsoft is releasing new and exciting features with new versions over the time. SQL Server 2016 also has lots of new features for database users and it has been reformed and made database users life easier. Real time operational analytics is amazing which is up to 30x fatser transactions with in-memory OLTP which performs operations from minutes to seconds. Here I am covering 10 new features in SQL Server 2016 which I think is great for database users though there are tons of new features which we will see in future articles in detail.

1. Stretch Databases

2. Always Encrypted

3. Dynamic Data Masking

4. Row Level Security

5. Multiple TempDB

6. JSON Support

7. PolyBase

8. Temporal Tables

9. Query Store

10. Advanced Analytics

2. Always Encrypted

3. Dynamic Data Masking

4. Row Level Security

5. Multiple TempDB

6. JSON Support

7. PolyBase

8. Temporal Tables

9. Query Store

10. Advanced Analytics

1. Stretch Databases

With this new and exciting feature you can stretch or extend the storage of on-premise database to Microsoft Azure and can retain data for longer time without any table break as normally we create historical tables for huge tables. It minimizes storage cost as you can control it over Azure. Once data is stretched to cloud, data movement will be handled in background which totally query-able without any changes to applications. The major benefit is Azure storage is 80% less expensive and reduces on-premises maintenance.

2. Always Encrypted

SQL Server 2016 includes enhanced security layer as ‘Always Encrypted’ feature which encrypts the data in motion, in memory and at rest without impacting performance. Microsoft has tried to protect sensitive data like bank details, credit card details and other critical data among data owners, data managers, on-premise DBAs, Cloud Operators and unauthorized users etc. Always Encrypted driver automatically encrypts and decrypts sensitive data in client applications. Sensitive columns data are encrypted before passing to database SQL Server engine and maintains semantics.

3. Dynamic Data Masking

This feature in SQL Server 2016 helps to prevent unauthorized access to confidential and sensitive data where client can control over limits of data to mask without impacting application layer. The masking is applied on query output without modifying existing queries and protects data which clients don’t want to reveal. This feature is just an added advantage with existing available features in previous versions of SQL Server like Auditing, Encryption etc.

4. Row Level Security (RLS)

SQL Server 2016 has introduced new feature to uniquely control and protect data at row level called Row Level Security (R L S). You can restrict data access from particular users with total transparency with applications. This is again one more added advantage to control access at more granular level than GRANT, REVOKE and DENY permissions.

5. Multiple TempDB

As we know tempdb is re-created every time SQL Server is restarted and temporary objects are dropped automatically and backup or restore activity is not allowed on tempdb. In SQL Server 2016 you can setup the tempdb data files at Database Engine Configuration step during installation which automatically creates default number of files with respect to number of logical processors on the server up to a maximum of 8 tempdb files on servers having 8 or more cores. Performance is also taken care and temporary tables and variables caching allows drop and create activity very fast with reduced page allocation contention. Trace flag 1117 and 1118 is not required now and all tempdb files will auto grow at the same time.

6. JSON Support

Java Script Object Notation also called JSON in short, is one of the most commonly used data exchange format. Normally web browsers and other recent services use JSON text format. So there was a huge request for SQL Server to handle and process JSON text. Microsoft added this in-built feature to parse and process JSON formatted data and to query JSON data stored and to exchange relational data in SQL Server.

7. PolyBase

This feature allows you to use T-SQL statements to query Hadoop clusters or Azure Blob Storage and can create queries that joins semi-structured data with SQL Server relational data sets. PolyBase is a inbuilt feature of SQL Server 2016 and you do not need understanding of Java or other Hadoop related concepts or any extra tools. PolyBase concept is not new to us as this concept is already introduced in Microsoft’s massive Parallel Processing (MPP) appliance called Parallel Data Warehouse (PDW).

8. Temporal Tables

Temporal table is a new feature in SQL Server 2016, it is also called System Versioned tables which automatically keeps history of data in the table. The temporal table is physically a different table then the base table and you can use it as row versioning solution also. Temporal table allows you to query records which have been updated or deleted and regular table will return current data only. This feature is useful for auditing, versioning, monitoring and recovering modified data from history.

9. Query Store

Query Store as the name suggests, it captures history of queries, execution plans and run time statistics which we can review for performance. You can easily find performance variations caused by query plan changes and improve the same. Prior to SQL Server 2016 you must have seen execution plan by using DMVs which are available in plan cache but now historical execution plan will also be available for review.

10. Advanced Analytics

SQL Server 2016 comes with enhanced analysis with R language. Revolution Analytics is statistical open source company which Microsoft purchased recently. Now users don’t need to export data to run analysis in R as it is incorporated with SQL Server. R is built-in to your T-SQL including end-to-end mobile BI, high performance DW, mission critical OLTP, multi-threading and massive parallel processing with in-memory.

What is Buffer Pool Extension (BPE)in SQL 2014?

provide the best performance gains for read-heavy OLTP workloads

Increases random I/O Processes with reduced Latency

Only clean pages are written to BPE

Wait type used for BPE I/O to completed is EC

Significantly slow down performance for large serial range scans

SQL Server 2014 also provides a new solid state disk (SSD) integration capability that enables you to use SSD storage to expand SQL Server 2014's buffer pool. The new buffer pool enhancements can help increase performance in systems that have maxed out their memory capability by using high-speed nonvolatile RAM (N V RAM) in the SSD drives as an extension to SQL Server 2014's standard buffer pool. The new buffer pool extensions can provide the best performance gains for read-heavy OLTP workloads

What is Parallelism and MaxDOP (Maximum Degree of Parallelism) in SQL Server

Parallelism:

Parallelism takes a query and breaks up the tasks needed to complete the query in smaller bits and then assigns those tasks to different worker threads

MaxDOP:

The number of additional threads assigned to the query is controlled by the Max Degree of Parallelism setting

Max DOP controls the amount of cores

Cost threshold for parallelism control when Max DOP kicks in

How to set Max DOP:

Server name and click on properties and click and Advanced tab and under Parallelism

change the max degree of parallelism setting

To the number of cores you want to allow a query to use

What are the IO affinity / CPU affinity in SQL Server

Parallelism:

Parallelism takes a query and breaks up the tasks needed to complete the query in smaller bits and then assigns those tasks to different worker threads

MaxDOP:

The number of additional threads assigned to the query is controlled by the Max Degree of Parallelism setting

parallelism is not occur on following scenario

1) cost of the query is below the cost threshold

2) the SQL instance Max DOP is set to 1

3) Only 1 CPU is assigned to SQL Server

4) Outdated statistics on your data can cause the query optimizer to underestimate the cost of the query

Max DOP controls the amount of cores

Cost threshold for parallelism control when Max DOP kicks in

How to set Max DOP:

Server name and click on properties and click and Advanced tab and under Parallelism

change the max degree of parallelism setting

To the number of cores you want to allow a query to use

What are the IO affinity / CPU affinity in SQL Server

Difference between Merge Replication, Bidirectional Transactional Replication, or Peer-to-Peer Replication

Since writes can occur at both servers, you will need to consider what to do in the event of a conflict. A conflict will occur when the same row/column is changed on 2 different servers between a sync. If possible, it is best to avoid conflicts altogether by partitioning the write operations. One way this can be achieved is by adding a location-specific identifier column to the writable tables and ensure that write operations for a particular row are performed at only one location.

Merge Replication provides bidirectional synchronization and the ability to define static and parameterized row filters to provide a subset of data to be published to subscribers. Merge Replication also provides built-in conflict resolver along with the ability to implement custom conflict resolver.

Bidirectional Transactional Replication provides bidirectional synchronization but does not offer any type of conflict detection or resolution.

Peer-to-Peer Replication provides bidirectional synchronization, however, it requires all nodes to be Enterprise Edition and does not support row or column filtering. Peer-to-Peer Replication has built-in conflict detection but does not offer automatic conflict resolution.

|

Is Push/Pull method used only on snapshot replication?

What are different types of report in SSRS?

No.

It is used on snapshot and transaction and peer to peer replication

A subscription can be defined as push (data is pushed to the subscription database from the Distributor) or pull (data is pulled to the subscription database from the Subscriber). Push subscriptions are more common with snapshot and transactional replication deployments. Pull subscriptions are more common with merge replication because the subscriber might be disconnected more frequently and might need to control when data is refreshed on-demand. The push/pull decision is sometimes also based on the capacity and overhead of the various servers participating in the replication topology.

External executables, which are called replication agents, move the data from the Publisher to the Distributor and then to the Subscriber. The type of replication agent is dependent upon the type of replication being used.

Different Types of Reports in SQL Server Reporting Services (SSRS)

With Reporting Services, you can create the following types of reports:

- Parameterized reports

- Linked reports

- Snapshot reports

- Cached reports

- Ad hoc reports

- Clickthrough reports

- Drilldown reports

- Drillthrough reports

- Subreports

Note: A single report can have characteristics from more than one type; for example, snapshot reports can be parameterized, ad hoc reports incorporate clickthrough report functionality due to the report models upon which they are based, and subreports can be linked reports.

How to restart SQL Server in single user mode?

SQL Server can be started from command line, using the SQLSERVR.EXE. This EXE has some very important parameters with which a DBA should be familiar with. -m is used for starting SQL Server in single user mode and -f is used to start the SQL Server in minimal configuration mode.

How do you troubleshoot SQL Server if its running very slow?

First check the processor and memory usage to see that processor is not above 80% utilization and memory not above 40-45% utilization then check the disk utilization using Performance Monitor, Secondly, use SQL Profiler to check for the users and current SQL activities and jobs running which might be a problem. Third would be to run UPDATE_STATISTICS command to update the indexes

What do you mean by COLLATION?

Difference between Unique Constraint and Unique Index

There are following difference

Which one is the better:

Unique Index (Non-clustered Index) is better than Unique Constraint because it also adds the index which we can use for faster retrieval.

If you don’t require an index on a unique column, you can go with unique Constraint.

Disable:

There are following difference

Which one is the better:

Unique Index (Non-clustered Index) is better than Unique Constraint because it also adds the index which we can use for faster retrieval.

If you don’t require an index on a unique column, you can go with unique Constraint.

Disable:

Unique Constraint: You cannot disable the Unique Constraint, you will get below error:

Msg 4916, Level 16, State 0, Line 1

Could not enable or disable the constraint. See previous errors.

Msg 4916, Level 16, State 0, Line 1

Could not enable or disable the constraint. See previous errors.

Unique Index: You can disable it

1

|

ALTER INDEX Index_name ON Table_name DISABLE

|

Errors: The error messages for both violations are also different

Unique Constraint violation error:

Filters:

Unique Constraint: You cannot add filter in Unique Constraint.

Unique Index: You can add filter in Unique Index:

How to disable the index?

1

2

|

ALTER INDEX index_name ON table_name DISABLE

GO

|

How to enable the index?

1

2

|

ALTER INDEX index_name ON table_name REBUILD

GO

|

How to check the status?

1

2

3

4

5

6

7

8

|

SELECT

name AS IndexName

,type_desc AS IndexType

, CASE IS_DISABLED

WHEN 0 THEN 'Enabled'

ELSE 'Disabled'

END AS IndexStatus

FROM master.sys.Indexes

|

How you are monitoring the size of Transaction Log Files?

see DBCC SQLPERF with the LOGSPACE option, and you can get the transaction log size and usage for all Databases of SQL Server. You can also use DBCC SQLPERF to reset wait and latch statistics.

Command:

1

|

DBCC SQLPERF(LOGSPACE)

|

If Outer transaction ROLLBACK, what happens to an Inner transaction in SQL Server.

You can find the answer in below demonstration so test it yourself.

Create a sample table:

1

2

|

CREATE TABLE tbl_TestTrans(ID INT)

GO

|

Open outer and inner transaction and insert few sample records:

1

2

3

4

5

6

7

8

|

BEGIN TRANSACTION OuterTrans

GO

BEGIN TRANSACTION InnerTrans

GO

INSERT INTO tbl_TestTrans VALUES(88)

GO 1000

COMMIT TRANSACTION InnerTrans

GO

|

Check the count of your table:

1

2

3

4

|

SELECT COUNT(1) FROM tbl_TestTrans

GO

--The result is:

-- 1000

|

Now, Rollback your Outer transactions:

1

2

|

ROLLBACK TRANSACTION OuterTrans

GO

|

Now, Check the count of your table:

Which is 0 now. Although you committed inner transaction for those 1000 records, outer transaction was not committed, so it rollbacked all transactions.

Which is 0 now. Although you committed inner transaction for those 1000 records, outer transaction was not committed, so it rollbacked all transactions.

1

2

3

4

|

SELECT COUNT(1) FROM tbl_TestTrans

GO

--The result is:

-- 0

|

Can we roll back changes of Security Patches upgrade in SQL Server 2005?

No. we can not roll back from SQL 2005 Applied patches. we should need to uninstall and install method

From SQL 2008 We can roll back service pack from view installed updates

No. we can not roll back from SQL 2005 Applied patches. we should need to uninstall and install method

From SQL 2008 We can roll back service pack from view installed updates

UNINSTALLING SQL SERVER 2008/2008R2 SERVICE PACK(S)

Uninstalling Just the Service Pack/CU without uninstalling the entire SQL Server Instance….!! Yes, A definite improvement, Starting SQL Server 2008. In SQL Server 2005 days if you want to Uninstall a Service Pack, basically you’ve to uninstall the entire SQL Server Instance. In other words…we didn’t had Roll Back Capability on any Updates we install over our SQL Instance. Let me explain with a simple Scenario…

You are running on SQL Server 2005 SP3 and because of “End of Support” announced by Microsoft, you decided to apply SP4 over it. You were able to successfully patch the prod Instance with SP4, but all of a sudden your application breaks and users are screaming and you identified the reason – “patching”. Well, you decided to fail over to your DR(BCP) site and meanwhile your plan is “rolling back your production Instance to SP3” so that users and you as a DBA can take a peaceful nap! How do you achieve that??? Unfortunately you’ve to Uninstall your SQL Server and Install your SQL Server 2005 RTM and apply SP3 and restore all of your Databases…and all that hectic work, which really is a very painful process.

Fortunately, starting SQL Server 2008 MSFT offered us a great enhancement – we can Just Uninstall whatever Update we’ve Installed:) yyyyy. So let’s see how to UnInstall Just your Service Pack without loosing your SQL Server Instance!

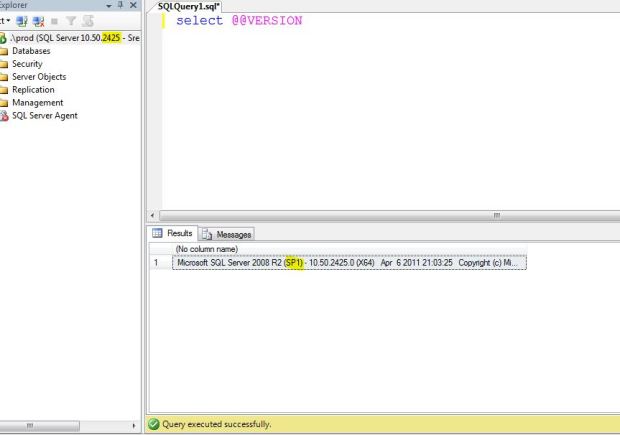

In this Post, I’m going to show you Uninstalling SQL Server 2008R2 SP1 and basically rolling back my Instance to RTM. As of now I’m running SQL Server 2008R2 SP1 as you can see in the below screenshot.

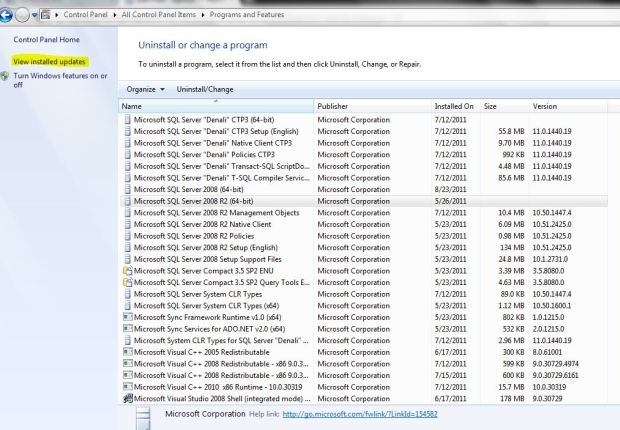

FYI…I’m on Win7 X64 machine. To Uninstall we’ve to go to “Control Panel” and “Programs and Features” and you Will be seeing SQL Server 2008R2 SP1 as any other typical Program which you can Uninstall from Control Panel.

Note: We’ve to select View Installed Updates in order to see this item under your Installed Programs(You can see that highlighted in the below Screenshot.)

As you can see in the above screenshot, there is no SP1 listed. See the below Screenshot once I clicked on View Installed Updates.

All we need to do is just Select that and Click on Uninstall Button or Just Right Click on it and Choose Uninstall. You’ll be automatically welcomed with SQL Server Installation Center as shown below.

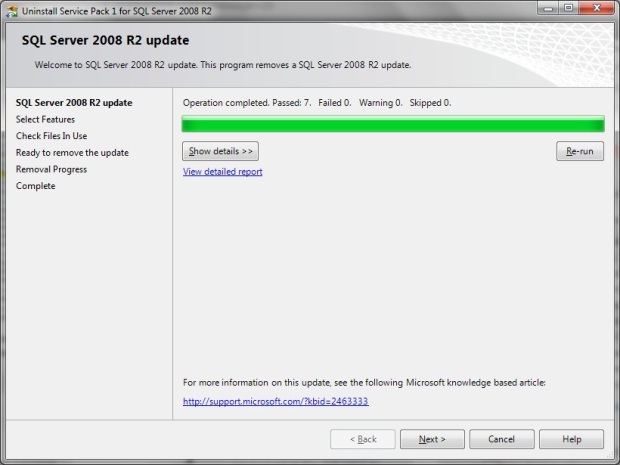

Click Next and As you can see below, I’ve two Instances of SQL server 2008R2 SP1(PROD and DR) on this Machine. I’ll Choose only Prod Instance( you can see below)

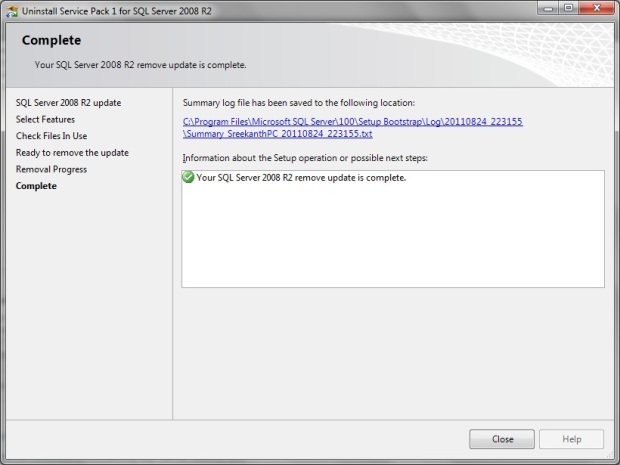

Once you click on Next..It’ll do a file check for any outstanding issues which might prevent un installation, Once you passed the test, It’s matter of Just clicking “NEXT” and “REMOVE”….That’s it guys:). After 4 minutes or so, I got a message “Uninstalled Successfully” as shown below.

See the below Screenshot, my PROD Instance is back to RTM where as my DR Instance is still on SP1

Msg 3702, Level 16, State 3, Line 2

Cannot drop database “DataBaseName” because it is currently in use.

Cannot drop database “DataBaseName” because it is currently in use.

This is a very generic error when DROP Database is command is executed and the database is not dropped. The common mistake user is kept the connection open with this database and trying to drop the database.

The following commands will raise above error:

1

2

3

4

| USE AdventureWorks;GODROP DATABASE AdventureWorks;GO |

The following commands will not raise an error and successfully drop the database:

1

2

3

4

| USE Master;GODROP DATABASE AdventureWorks;GO |

If you want to drop the database use master database first and then drop the database.

What is this port usage 1433 & 5022?

1433 is default port

5022 is Database mirroring

How can you prepare and how should you apply service packs or hotfixes on the Active/Passive SQL Server cluster?

Preparation steps for the production server after you had

already tested the service pack or hotfixes on a development server:

1.

Request a scheduled maintenance window of 1 hour or more.

Usually in the late evenings or weekends, depending on your business.

2.

Once approved, notify the users or required teams of the

scheduled maintenance window.

3.

Download the service pack or hotfixes to a shared drive or to a

local drive.

4.

Backup all databases.

5.

Script out all SQL Server Agent jobs.

6.

Script out all the logins and permissions for the logins.

If your System Administration team has third party software to

take snapshot of the servers, ask them nicely to do so.

Applying the service pack or hotfixes on the Active/Passive SQL

Server cluster:

1.

On the passive node (Node2), apply the service pack or hotfixes.

2.

Reboot the passive node (Node2).

3.

On the active node (Node1), failover the SQL resource. The

passive node (Node2) that you had already patched will become the active node.

4.

On the passive node (Node1), apply the service pack or hotfixes.

5.

Reboot the passive node (Node1).

You can verify the current service pack and version build number

by running the following query:

-- Querying the SQL Server Instance level info

|

||

2

|

SELECT

|

|

3

|

SERVERPROPERTY('ServerName') AS [SQLServer]

|

|

4

|

,SERVERPROPERTY('ProductVersion') AS [VersionBuild]

|

|

5

|

,SERVERPROPERTY

('Edition') AS [Edition]

|

|

6

|

,SERVERPROPERTY('ProductLevel') AS [ProductLevel]

|

|

7

|

,SERVERPROPERTY('IsIntegratedSecurityOnly') AS[IsWindowsAuthOnly]

|

|

8

|

,SERVERPROPERTY('IsClustered') AS [IsClustered]

|

|

9

|

,SERVERPROPERTY('Collation') AS [Collation]

|

||

10

|

,SERVERPROPERTY('ComputerNamePhysicalNetBIOS') AS[CurrentNodeName]

|

||

What is the difference between general distribution and limited

distribution releases?

Recent advancements in

delivering updates to all Microsoft’s customers is the advent of creating

separate development environments in which updates are created from. So what

does this really mean and how does it apply to my computers? Microsoft updates

now offer packages for general distribution release as well as QFE (Quick Fix

Engineering) also known as hotfixes, for specific issues. When you download a

Windows Update you are downloading one of the following:

- Security updates

- Feature packs

- Update roll-ups

- Drivers

- Critical updates

These are GDR (General

Distribution Release) updates. GDR updates can be found on Windows Update

site so as to be available to a wider audience. Another key item is GDRs are

tested to a greater degree than LDRs (Limited Distribution Release). LDR, also

known as hotfixes or QFEs, are created when a hardware, BIOS or a software

issue is identified that needs a fix in a specific and small subset of customer

environments. These fixes have not been tested for the same length of time for

regressions or incompatibilities as what a GDR fix typically goes through.

Another key point is LDRs generally contain all the prior fixes from both GDR

and LDR while GDR releases do not contain LDR fixes

Legend:

CTP

|

Community Technology Preview (beta release)

|

RC

|

Release Candidate

|

RTM

|

Released To

Manufacturing; It is the original,

released build version of the product, i.e. what you get on the DVD or when

you download the ISO file from MSDN.

|

CU

|

Cumulative Update; Cumulative updates contain the bug fixes and

enhancements–up to that point in time–that have been added since the previous

Service Pack release and will be contained in the next service pack release.

Installation of the Cumulative Update is similar to the installation of a Service

Pack. Cumulative Updates are not fully regression tested.

* Since January 27, 2016: Microsoft recommends ongoing, proactive installation of SQL Server CUs as they become available. SQL Server CUs are certified to the same levels as Service Packs, and should be installed with the same level of confidence. |

SP

|

Service Pack; much larger collection of hotfixes that have

been fully regression tested. In some cases delivers product enhancements.

|

GDR

|

General Distribution

Release; GDR fixes should not

contain any of the CU updates.

|

QFE

|

Quick Fix Engineering; QFE updates include CU fixes.

|

What is latest service

Pack in SQL 2012 ? and to know latest service pack in SQL Server

As of now SP3 July

2017

SP4 will release in

Sep 2017

RTM (no SP)

|

SP1

|

SP2

|

SP3

|

SP4

|

|

not yet released

|

|||||

13.0.1601.5

|

|||||

12.0.2000.8

|

|||||

11.0.2100.60

|

|||||

10.50.1600.1

|

|||||

10.0.1600.22

|

|||||

9.0.1399.06

|

|||||

8.0.194

|

|||||

7.0.623

|

https://sqlserverbuilds.blogspot.in/

Can we directly apply

service SP3 on SQL 2008 R2?

Yes we can directly apply service SP3 but if you apply from

RTM version SP3 Cumulative Update 2 (CU2)

You need to apply from RTM to SP3 and then go to apply CU2

How to Apply patches

on Active /Active cluster node

https://www.veritas.com/support/en_US/article.000067494

https://www.red-gate.com/simple-talk/sql/backup-and-recovery/the-sql-server-instance-that-will-not-start/

If

Model DB corrupted, what is the status of SQL Server Instance

If ur master and model DB

is corrupted Your SQL server instance will not start.

In the startup process, once SQL has opened and recovered the master database it needs to bring the other system databases online, starting with TempDB. However, SQL Server cannot simply locate and open the TempDB files and run crash recovery, as it does for the other system databases. TempDB is not like the other databases in the instance in that it's not intended to be a permanent store of data. As such, logging works differently for TempDB; for this database, SQL Server cannot roll transactions forward, which is part of a normal crash recovery. Therefore, instead of simply recovering TempDB on startup, SQL Server must return it to a known state, and the model database provides that known state

what is prerequisite for Cluster and AlwaysON

Difference between cluster and alwayson

No comments:

Post a Comment