SSIS SQL Server Integration Services Basic Concepts and Explanations and Examples:

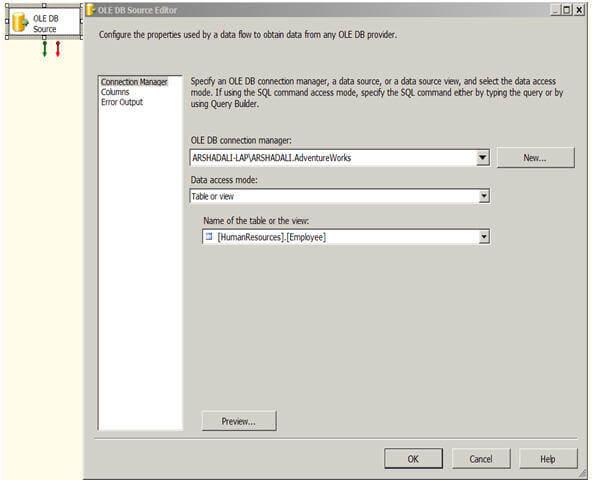

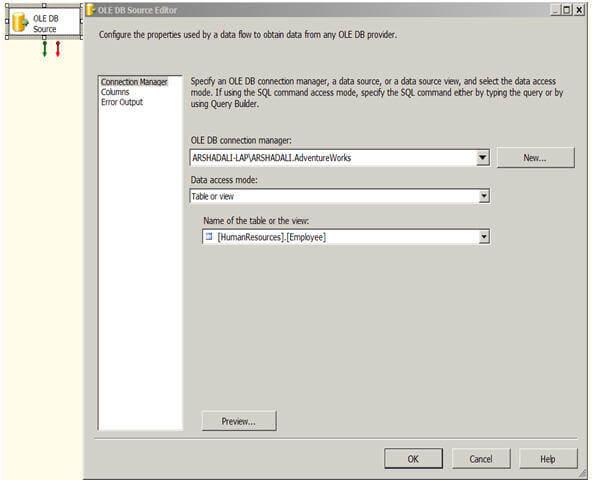

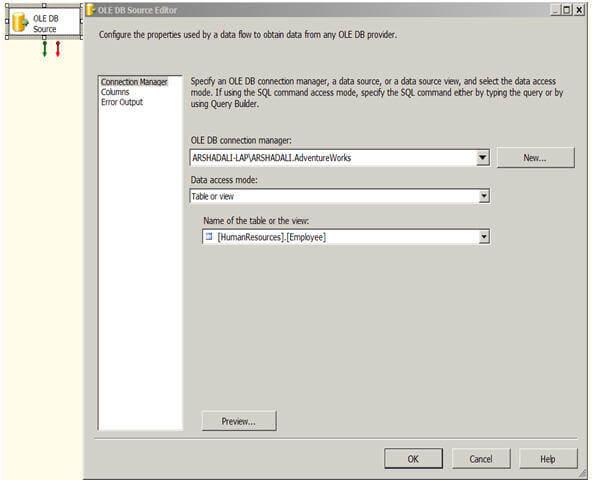

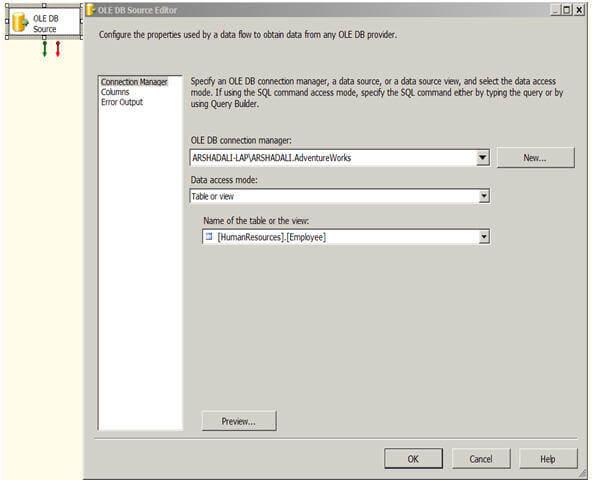

Notice that I’m retrieving a subset of data from the HumanResources.Employee table. The returned dataset includes two columns: BusinessEntityID and NationalIDNumber. We will use the BusinessEntityID column to match rows with the input dataset in order to return a NationalIDNumber value for each employee. Figure 3 shows what your OLE DB Source editor should look like after you’ve configured it with the connection manager and T-SQL statement.

In the first page I mean Component Properties Tab you don’t need to do anything ,select Input Column tab where you will see source available columns check the filepath column.

We have to do one important step here we have to put this LineageID 29 highlighted in above screenshot. Into the Import column Input’s Filepath. Now press ok .

MERGE JOIN Transformation in SSIS:

If you have small number of records and enough memory on machine where you are running the SSIS Package, this can be quick solution. If you have large number of records , These package can take long time to run. As Sort and Aggregate Transformation are blocking transformations. If you have large number of records, You might want to inserted them into database tables and then perform Union operation.

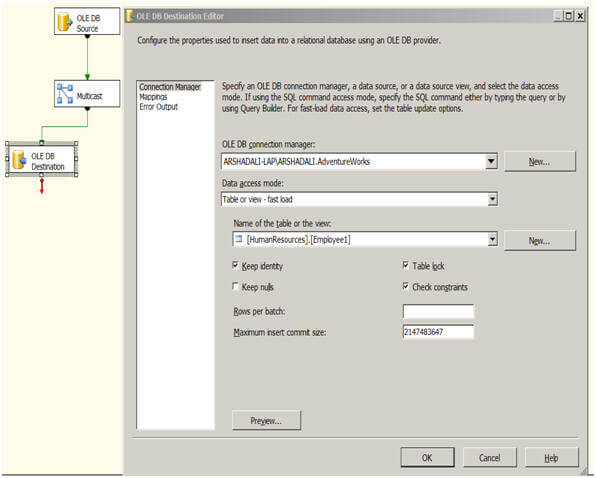

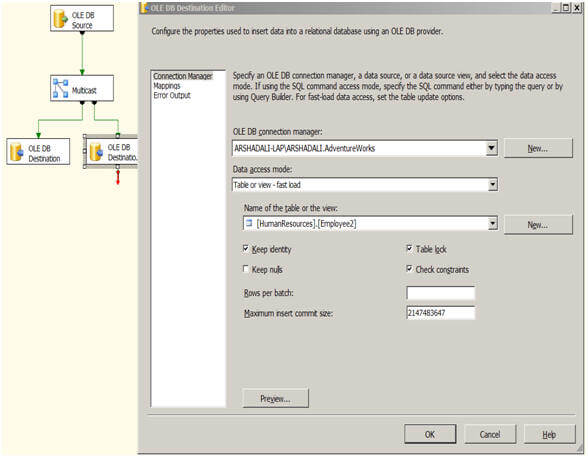

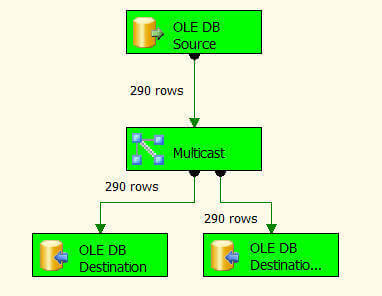

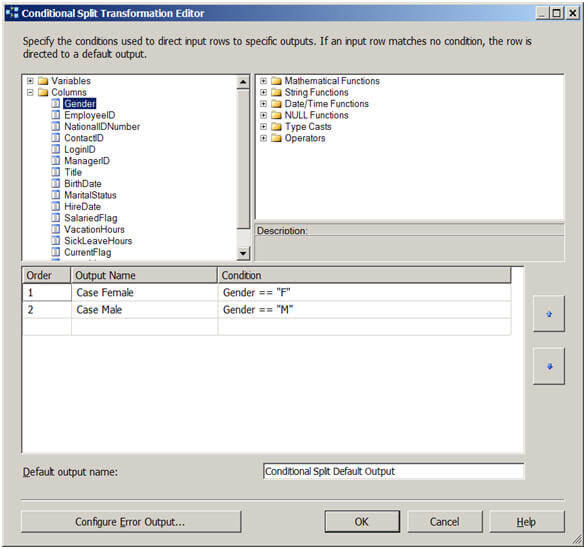

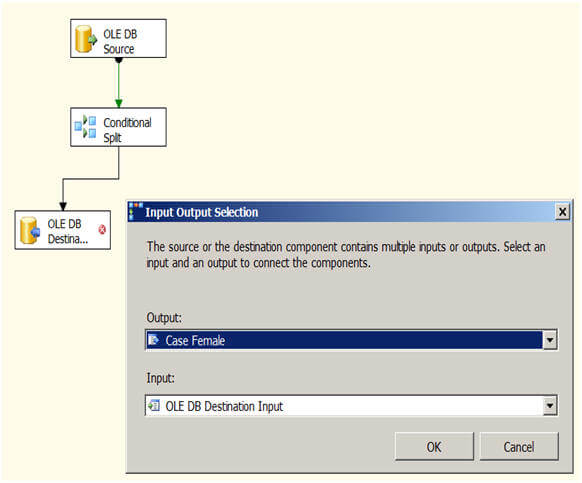

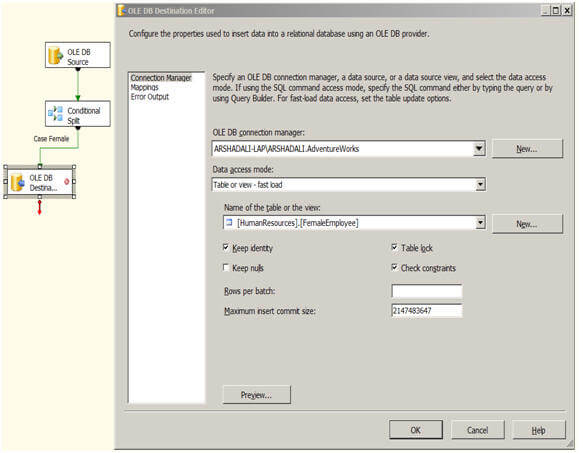

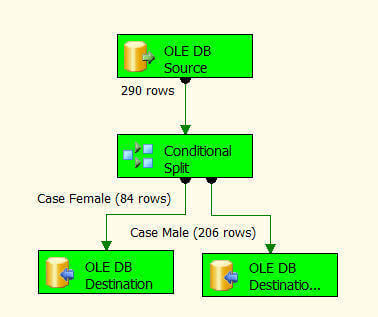

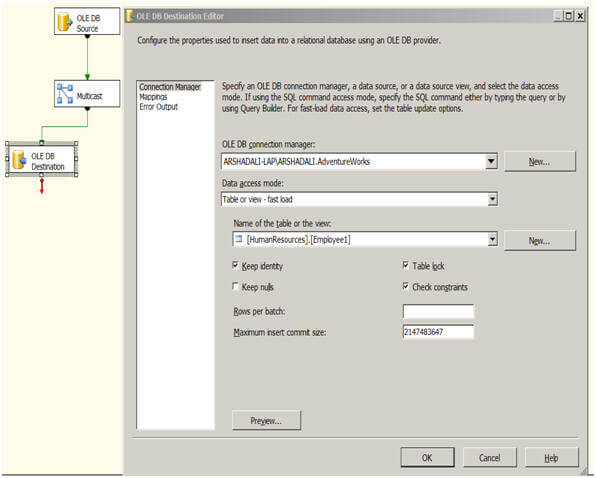

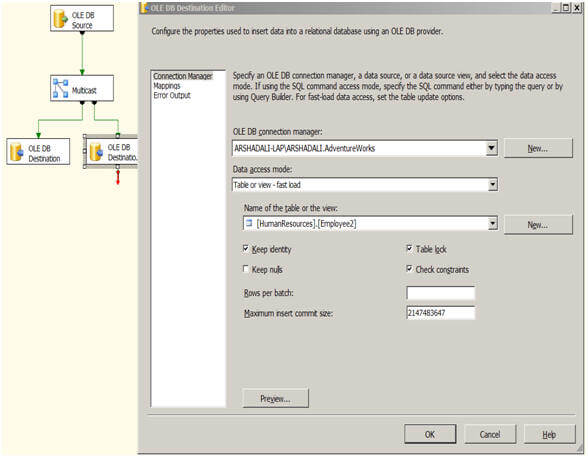

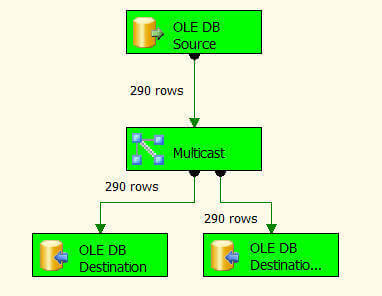

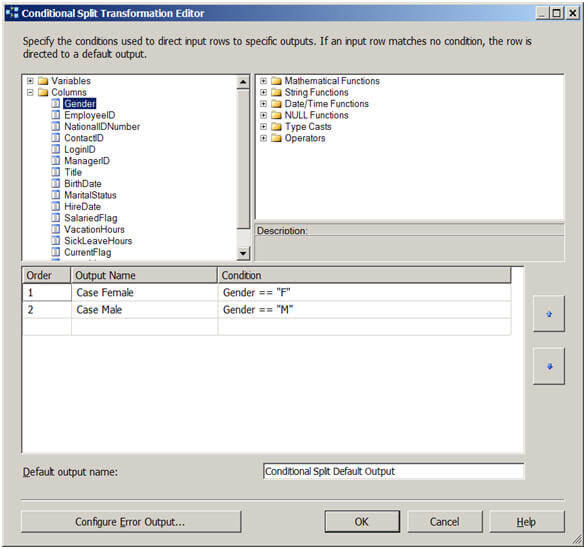

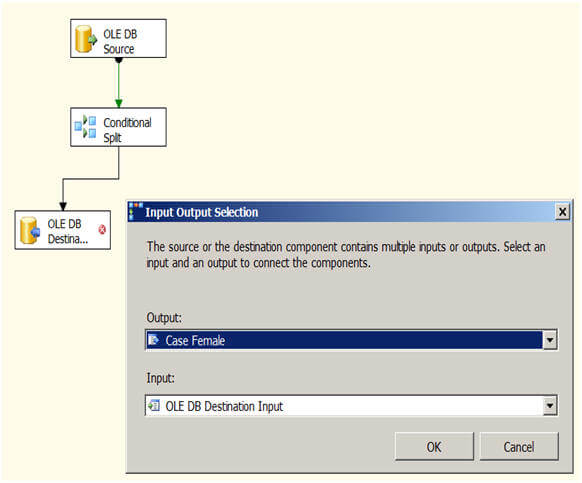

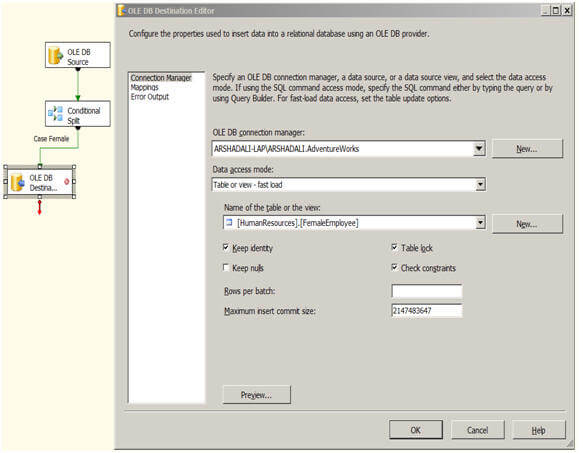

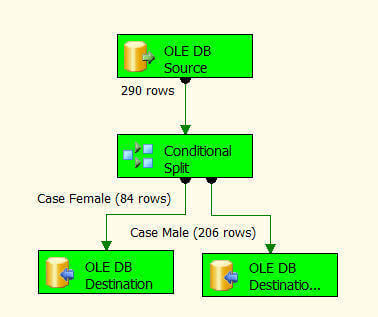

Multicast Transformation generates exact copies of the source data, it means each recipient will have same number of records as the source whereas the Conditional Split Transformation divides the source data on the basis of defined conditions and if no rows match with this defined conditions those rows are put on default output.

In a data warehousing scenario, it's not rare to replicate data of a source table to multiple destination tables, sometimes it's even required to distribute data of a source table to two or more tables depending on some condition. For example splitting data based on location. So how we can achieve this with SSIS?

- The Character Map transformation enables us to modify the contents of character-based columns

- Modified column can be created as a new column or can be replaced with original one

- The following character mappings are available:

--> Lowercase : changes all characters to lowercase

--> Uppercase : changes all characters to uppercase

--> Byte reversal : reverses the byte order of each character

--> Hiragana : maps Katakana characters to Hiragana characters

--> Katakana : maps Hiragana characters to Katakana characters

--> Half width : changes double-byte characters to single-byte characters

--> Full width : changes single-byte characters to double-byte characters

--> Linguistic casing : applies linguistic casing rules instead of system casing rules

--> Simplified Chinese : maps traditional Chinese to simplified Chinese

--> Traditional Chinese : maps simplified Chinese to traditional Chinese

EXAMPLE

Pre-requisite

Following script has to be created in DB

Steps

1. Add Data Flow Task in Control Flow

2. Drag source in DFT and it should point to CharacterMapDemoSource Table.

3. Drag Character Map Transformation and do following settings.

4. We can add new column or we can replace existing one with new Output Alias

5. For each column, we can define different operation

6. Drag Destination and connect it with Character map

7. Records will look something like this.

8. ByteReversal operation basically goes by the byte of a string and reverses all the bytes.

- The Audit transformation lets us add columns that contain information about the package execution to the data flow.

- These audit columns can be stored in the data destination and used to determine when the package was run, where it was run, and so forth

- The following information can be placed in audit columns:

--> Execution instance GUID (a globally unique identifier for a given execution of the package)

--> Execution start time

--> Machine name

--> Package ID (a GUID for the package)

--> Package name

--> Task ID (a GUID for the data flow task)

--> Task name (the name of the data flow task)

--> User name

--> Version ID

EXAMPLE

Pre-requisite

Following script has to be executed in DB

Steps

1. Add Data Flow Task in Control Flow

2. Drag source in DFT and it should point to AggregateDemo

3. Add Audit Transformation and connect it with Source

4. Add Destination and map columns

5. After execution of the package, records will look like this.

Applies to: SQL Server 2012 Standard Edition.

Data profiling is the process of examining the data to obtain statistics about it and use those statistics to better understand the data, to identify problems with the data, and to help properly design ETL processes.

3. Double click the Data Profiling Task on the Common section of the SSIS Toolbox or drag it to Control Flow surface.

4. Configure the Data Profiling Task.

Next, let's provide the connection, table and column on that table that will be analyzed.

Make a click on the Open Profile Viewer button.

https://www.simple-talk.com/sql/ssis/

Ref:

http://www.phpring.com/data-flow-and-its-components-in-ssis/

What do we mean by Data flow in SSIS?

Data flow encapsulates the data flow engine and consists of Source, Transformations and Target. The core strength of in MS SQL Server Integration Services (SSIS) is its capability to extract data into the server’s memory (Extraction), transform it (Transformation) and load it to an alternative destination (Loading). It means the data is fetched from the data sources, manipulated or modified through varioustransformations and loaded into the target destination. The data flow task in SSIS sends the data in series of buffer.

What do we mean by Data flow in SSIS?

Data flow encapsulates the data flow engine and consists of Source, Transformations and Target. The core strength of in MS SQL Server Integration Services (SSIS) is its capability to extract data into the server’s memory (Extraction), transform it (Transformation) and load it to an alternative destination (Loading). It means the data is fetched from the data sources, manipulated or modified through varioustransformations and loaded into the target destination. The data flow task in SSIS sends the data in series of buffer.

Example Scenario – Consider a conveyor belt in a factory. Raw material (Source data) is placed on the conveyor belt and passes through various processes (Transformations). Quality assurancecomponents might reject some material, in which case it can be scrapped (Logged) or fixed and blended back in with the quality material. Eventually, finished goods (Clean and Valid data) arrive at the end of the conveyor belt (Data warehouse).

The first step to implement a Data flow in a package is to add a data flow task to the Control flow of a package. Once the data flow task is included in the control flow of a package, we can start building the data flow of that package.

NOTE: – Arrows connecting the data flow components to create a pipeline are known as Service paths where as arrows connecting components in control flow are known as Precedence constraints. At design time, Data viewers can also be attached to the Service paths to visualize the data.

STEP 1. Creating a Data Flow will include:-

Source(s) to extract data from the databases.

- Adding Connection managers to connect to the data sources.

- Transformations to manipulate or modify the data according to the business need.

- Connecting data flow components by connecting the output of source to transformation and the output of transformation to destination.

- Destination(s) to load the data to data stores.

- Configuring components error outputs.

STEP 2. What are the Components of Data flow?

Components includes –

- Data source(s).

- Transformations.

- Destination(s).

Component 1 – Data Flow Sources

| Data Flow Sources | Description |

| OLE DB Source | Connects to OLE DB data source such as SQL Server, Access, Oracle, or DB2. |

| Excel Source | Receives data from Excel spreadsheets. |

| Flat File Source | Connects to a delimited or fixed-width file. |

| Raw File Source | Do not use connection manager. It produces a specialized binary file format for data that is in transit. |

| XML Source | Do not use connection manager. Retrieves data from an XML document. |

| ADO.NET Source | This source is just like the OLE DB Source but only for ADO.NET based sources. |

| CDC Source | Reads data out of a table that has change data capture (CDC) enabled. Used to retrieve only rows that have changed over duration of time. |

| ODBC Source | Reads data out of table by using an ODBC provider instead of OLE DB. |

Component 2 – Data Flow Transformations

| Transformation Categories | Transformations |

| Row Transformations | Character Map |

| Copy Column | |

| Data Column | |

| Derived Column | |

| OLE DB Command | |

| Rowset Transformations | Aggregate |

| Sort | |

| Pivot/Unpivot | |

| Percentage sampling/Row sampling | |

| Split and Join Transformations | Conditional split |

| Look up | |

| Merge | |

| Merge join | |

| Multicast | |

| union All | |

| Business intelligence transformations | Data Mining Query |

| Fuzzy Look Up | |

| Fuzzy Grouping | |

| Term Extraction | |

| Term Look up | |

| Script Transformations | Script |

| Other Transformations | Audit |

| Cache Transform | |

| Export Column | |

| Import Column | |

| Row Count | |

| Slowly Changing Dimension |

Component 3– Data Flow Destinations

| Data Flow Destinations | Description |

| ADO.NET Destination | Exposes data to other external processes such as a .NET application. |

| Data Reader Destination | Allows the ADO.NET Data Reader interface to consume data, similar to the ADO.NET Destination. |

| OLE DB Destination | Outputs data to an OLE DB data connection like SQL Server, Oracle or Access. |

| Excel Destination | Outputs data from the Data Flow to an Excel spreadsheet. |

| Flat file Destination | Enables you to write data to a comma-delimited or fixed-width file. |

| Raw file Destination | Outputs data in a binary format that can be used later as a Raw File Source. It’s usually used as an intermediate persistence mechanism. |

| ODBC Destination | Outputs data to an OLE DB data connection like SQL Server, Oracle or Access. |

| Record set Destination | Writes the records to an ADO record set. Once written, to an object variable, it can be looped over a variety of ways in SSIS like a Script Task or a Foreach Loop Container. |

| SQL Server Destination | The destination that you use to write data to SQL Server. This destination has many limitations, such as the ability to only write to the SQL Server where the SSIS package is executing. For example – If you’re running a package to copy data from Server 1 to Server 2, then the package must run on Server 2. This destination is largely for backwards compatibility and should not be used. |

This completes the introduction of Data flow and it’s components in SSIS. In our next tutorial we will discuss more about the various transformations categories and there functionalities. I hope this grabs your interest towards Data Flow in SSIS. Your comments are welcome.

Aim – In this post we will learn abot Data Flow Transformation Categories in SSIS. Transformations are defined as a core component in the Data flow of a package in SSIS. It is that part of the data flow to which we apply our business logic to manipulate and modify the input data into the required formatbefore loading it to the destination. All the Data Flow Transformations are broadly classified into 2 types:-

Type 1 – Synchronous Transformations.

Type 2 – Asynchronous Transformations.

What is the difference between Synchronous and Asynchronous transformations?

| Synchronous Transformations | Asynchronous Transformations |

| Processes each incoming row, modifies according to the required format and forward it. | Stores all the rows into the memory before it begins the process of modifying input data to the required output format. |

| No. of input rows = No. of output rows. | No. of input rows != No. of output rows |

| Output rows are in sync with Input rows i.e. 1:1 relationship. | Output rows are not in sync with Input rows |

| Less memory is required as they work on row by row basis. | More memory is required to store the whole data set as input and output buffers do not use the same memory. |

| Does not block the data flow in the pipeline. | Are also known as “Blocking Transformations” as they block the data flow in the pipeline until all the input rows are read into the memory. |

| Runs quite faster due to less memory required. | Runs generally slow as memory requirement is very high. |

| E.g. – Data Conversion Transformation- Input rows flow into the memory buffers and the same buffers come out in the required data format as Output. | E.g. – Sort Transformation- where the component has to process the complete set of rows in a single operation. |

Further Asynchronous Transformations are divided into 2 categories:-

- Partially blocking Transformations creates new memory buffers for the output of the transformation such as the Union All Transformation.

- Fully blocking Transformations performs the same operation but cause a full block of the data such as the Sort and Aggregate Transformations.

Data Flow Transformation Categories are as follows:-

- This transformation is used to update column values or create new columns.

- It transforms each row present in the pipeline (Input).

| Transformation Name | Description |

| Character Map | Modifies strings, typically for changes involving code pages. |

| Copy Column | Copies columns to new output columns. |

| Data Conversion | Performs data casting. |

| Derived Column | Allows the definition of new columns, or the overriding of values in existing columns, based on expressions. |

| OLE DB Command | Executes a command against a connection manager for each row. This transformation can behave as a destination. |

2. Rowset Transformations –

These transformations are also called Asynchronous as they “dam the flow” of data i.e. Stores all the rows into the memory before it begins the process of modifying input data to the required output format.

- As a result, a block is caused in the pipeline of data until the operation is completed.

| Transformation Name | Description |

| Aggregate | Aggregates (summarizes) numeric columns |

| Percentage Sampling | Outputs a configured percentage of rows |

| Row Sampling | Outputs a configured number of rows |

| Sort | Sorts the data, and can be configured to remove duplicates. |

| Pivot | Pivots the data |

| Unpivot | Unpivots the data |

3. Split and join Transformations –

- Distribute rows to different outputs.

- Create copies of the transformation inputs.

- Join multiple inputs into one output.

| Transformation Name | Description |

| Condition Split | Uses conditions to allocate rows to multiple outputs. |

| Look up | Performs a look up against a reference set of data. Typically used in the fact table for loading packages. |

| Merge | Unions two sorted inputs and retains sort order in the output. |

| Merge Join | Joins two sorted inputs, and can be configured as Inner, Left Outer or Full Outer. |

| Multicast | Broadcasts (duplicates) the rows to multiple outputs. |

| Union All | Unions two or more inputs to produce a single output. |

4. Business Intelligence Transformations –

These are used to introduce data mining capabilities and data cleansing.

- Cleaning data includes identification and removal of duplicate rows based on approximate matches.

- These are only available with the Enterprise Edition

5. Script Transformations –

Extends the capabilities of the data flow.

- Delivers optimized performance because it is precompiled.

- Similar to the Script Task, it delivers the ability to introduce custom logic into the data flow using VB.NET or C#.NET.

- It can be configured to behave as a Source, a Destination, or any type of Transformation.

6. Other Transformations –

Add Audit Information such as when the package was run and by whom.

- Export and Import Data.

- Stores the row count from the Data Flow into a variable

| Transformation Name | Description |

| Audit | Adds audit information as columns to the output. |

| Cache Transform | Prepares caches for use by the Lookup transformation. |

| Export and Import Column | Extracts or load data from/to the file system. |

| Row Count | Stores the number of rows that have passed through the transformation into a variable. |

| Slowly Changing Dimensions | Produces SCD logic for type 1 and 2 changes. |

This completes the basic of Data Flow Transformation Categories in SSIS. You can have as manytransformations as you need according to your business requirement with the use of Script Transformation. This feature of creating custom transformations via VB.NET or C# programming language, makes it a valuable tool in this competeting market of Business Intelligence. Further we will do the implementation of each Data Flow Transformation. We will see how it is configured to work according to our requirements.

Aggregate Transformation in SSIS:

Aim : This post is specifically for those geeks who wants to learn about SSIS Aggregate Transformation. Microsoft provides a vast list of Data Flow transformations in SSIS, and this (aggregate transformation) is one of the most used transformations in SSIS.

Description : Aggregate transformation is a blocked and Asynchronous transformation. WithAsynchronous, I mean to say that Output rows will not be equal to the Input rows. Also, this is categorized as Blocked transformation because aggregation is performed on all the column values. So, it will not release any row until it processes all rows. For more information on Dataflow transformation categories and examples, visit this – Dataflow transformations in SSIS

It will work in the same way as the aggregate functions perform in the SQL server. Generally, we have different types of Aggregate functions (MIN, MAX, SUM, AVG, COUNT and COUNTDISTINCT). The aggregate transformation also provides GROUP BY clause, which you can use to specify groups to aggregate across.

Now we are going to learn the functionality of each function in detail. You can visit, Source – Microsoft’s website to learn more on this.

- SUM: Used as SUM(). This function Sums up the values present in a column. Only columns with numeric data types can be summed.

- AVG: Used as AVG(). This function Returns the average of all the column values present in a column. Only columns with numeric data types can be averaged.

- MAX: Used as MAX(). Returns the maximum value present in a group. In contrast to the Transact-SQL MAX function, this operation can be used only with numeric, date, and time data types.

- MIN: Used as MIN(). Returns the minimum value present in a group. In contrast to the Transact-SQL MIN function, this operation can be used only with numeric, date, and time data types.

- COUNT: Used as COUNT(). Returns the number of items present in a group.

- COUNT DISTINCT:Returns the number of unique nonnull values present in a group.

- GROUP BY: Used as GROUP BY(). Divides data sets into groups. Columns of any data type can be used for grouping.

The SSIS Aggregate transformation will handle null values in the same way as the SQL Server relational database engine handles. The behavior is defined in the SQL-92 standard. Following are the rules which are applied:

- In a GROUP BY clause, nulls are treated like other column values. If the grouping column contains more than one null value, the null values are put into a single group.

- In the COUNT (column name) and COUNT (DISTINCT column name) functions, nulls are ignored and the result excludes rows that contain null values in the named column.

- In the COUNT (*) function, all rows are counted, including rows with null values.

I am sure this information provided by Microsoft must have helped you to clear your basics. Let’s go ahead to my favorite session i.e. Step by Step Example to show working of Aggregate transformation in SSIS.

Step by Step example on SSIS Aggregate transformation

Step 1. SSIS Package Configuration.

- Create a SSIS package with name “Aggregate”.

- In your SQL Server Data tool (abbreviated as SSDT is known as BIDS of 2012), drag and drop Data Flow Task (DFT) to control flow window.

- Double click on Data Flow Task. It will take you to the Data flow tab where you can perform yourETL functions i.e. E – Extract data from heterogeneous source, T – Transform it with Data flow transformations and L – Load transformed data to Data warehouse.

Step 2. OLEDB Source Configuration.

- Drag and drop OLEDB source to Data flow window.

- Now, configure OLEDB source as per your settings. For this example, I am creating a connection manager to OLEDB source. I have given server name as (local). I am choosing database as AdventureWorks2008. Add this connection manager to OLEDB source. I am selecting a table named [Sales].[SalesOrderDetail].

- Here, I have not shown you the configuration steps of OLEDB source. I hope you all are very familiar with it. Trust me it is as simple as shopping from flipkart.

Step 3. Aggregate Dataflow Transformation Configuration.

- Drag and drop Aggregate transformation to Dataflow window.

- Create a connection between OLEDB source and Aggregate transformation. To create connection, hold path (green arrow) from OLEDB source and drag it onto Aggregate transformation.

- To configure Aggregate transformation, double click on it. An Aggregate transformation editor window will pop up asking for settings.

- Select the required Columns and choose appropriate Operation on that selected column.

- In this example, I am going to find total rows in the table by using COUNT function and maximummodified date by using MAX function, the sum of unit price by using SUM function and the minimumline total by using MIN function.

- Below screen shot states that I have implemented all the mentioned operations in my aggregate transformation.

- Finally click on OK button to confirm the end of configuration for “SSIS Aggregate Transformation”.

Step 4. OLEDB Destination Configuration.

- Drag and drop OLEDB destination to the Dataflow window.

- Create a connection between Aggregate transformation and OLEDB destination.

- Configure OLEDB destination as per your settings.

- I am attaching Data viewer to view the output while executing the SSI package.

- Finally it will look like picture shown below.

Note :- Create a “Data Viewer” between Aggregate transformation and OLEDB destination for observing the flow of data as well as Output data too.

Kudos!!! We are done with the creation of SSIS package which will perform aggregate transformation on data. Now, I am going to run our SSIS package to view the results.

Conclusion – By observing about screen shot, we can conclude our SSIS aggregate transformationoperations on given table ([Sales].[SalesOrderDetails]) is performed successfully. Final output is – One single row with Four columns. Below is the complete analysis of this result.

- First column tells us the total count of rows in given table.

- Second column is showing the maximum Modified date.

- Third column is showing the sum of Unit price.

- Fourth column gives the minimum Line total.

How to load multiple Excel sheets data into one Excel workbook

Aim :- What you are going to Learn is – How to load multiple excel sheets data? The core reason of putting this scenario in Question Laboratory (Q Lab) is that we will show you how to load multiple excelsheets data into a single excel work book without using Foreach loop container in SSIS.

Description :- I have seen many notifications on Facebook’s MSBI groups regarding this question i.e.How we can load multiple excel sheets data into one excel workbook in SSIS? This question was asked by one guy who faced this scenario in a SSIS Interview. My direct answer was SSIS Foreach Loop Container. But there was a catch in this question. The catch was we have to load the data without using SSIS Foreach loop container.

To be frank I was not aware about the answer for this question and I never faced this scenario before too. I found this post interesting and followed my other passionate members comment. One guy in that group answered this question in a very simple way. Then, I tried it at home and I learned the answer from his comment. So, I thought to make this interesting SSIS Interview question as an article and share with you guys from PhpRing (A Ring of People Helping People) platform.

Steps to Load multiple Excel sheets data into Single Excel workbook without using Foreach Loop container in SSIS

Step 1 : Create Excel workbook with 3 sheets.

- Create one excel workbook and fill some dummy data in 3 sheets as the below image.

NOTE – Make sure that the format of the data in 3 sheets will be same.

Step 2 : Create SSIS package to load Data from Excel

- Create one SSIS (SQL Server Integration Services) package and name it as say “Load excel sheets”.

- Drag & drop DFT (Data Flow Task) to the control flow pane.

- Double click on DFT. Now, Drag and drop excel source component from SSIS toolbox to the data flow pane.

- Double click on Excel source to configure it.

- Once we click on Excel source, we will see the below screen shot.

- In the above picture, I have created a connection to my source (Excel connection Manager as my source is Excel).

- Then, Select SQL command as Data access mode.

- Write below SQL query (copy and paste the same) in SQL command text area.

- Click on Parse query to test the syntactical errors.

- Finally click on OK button (If everything works good).

Step 3 : SSIS package execution to load multiple excel data

- Instead of configuring destination, I am taking Derived column transformation as temporary destination to show the output.

- Create a connection between Data flow task (DFT) and Derived column transformation (Temporary destination).

- I am enabling the Data viewer to see the flow of the data between Excel source and Derived column.

- Your SSIS package should look like below image.

- Finally execute SSIS package to load multiple excel sheets data into one excel workbook.

- Once you executed the SSIS package, it will look like below image. Green color indicates that package executed successfully and Red color will mean that you have a bad day.

- In the below screen shot, we can observe that the data from three excel sheets have been readand it has been processed successfully to the next level. This means we achieved our target i.e. To load multiple excel sheets data into one excel workbook.

NOTE – I will only suggest this process, when we have limited number of excel sheets. But, if we have to load more number of excel sheets into a single work book then it will be bit difficult. The reason for this is that we need to write more select statements (equal to number of sheets). So this will make the situation complex for the BI developer.

Sort Transformation in SSIS

Aim :- In this, we will implement Sort Transformation in SSIS. In our earlier SSIS Tutorials, we discussed Control flow in SSIS. But, with this post we will now move forward to Data flow in SSIS. Sort Transformation is present in Data Flow tab. We will now learn the functionality of Sort transformation in SSIS.

Description :- Sort can be defined as any process of arranging items or data according to a certain sequence. Sort transformation in SSIS works on the same principle. Sort transformation in SSIS is used to “Sort” the data either ascending or descending order. Sort transformation in SSIS is mainly used with Merge or Merge Join Transformations. We can call Sort transformation as Asynchronous Transformation and Blocked Transformation. Are you wondering what is Asynchronous and Blocked transformations? We have a solution right below this.

What is a Blocked Transformation?

Blocked Transformation means – The next operation can’t start until & unless it completes theprevious operation. In this, rows will not be send to next transformations until all of the Input rows have been read. In simple words, they will process all rows first and than release them for further Transforamtions.

How it works?

- First it read the all the Data from Source.

- Once it read the all the data from Source then it performs Sort Transforamtion (Asc or Desc) based upon condition.

- Finally it loads the Data into destination.

I think this explanation is enough to understand the concept of Blocked transformations. Now we are good to learn Sort Transformation in SSIS. Let’s work on Sort transformation in SSIS with a simple example.

STEP 1. Source Data :-

Below is a file containing my source data. It contains data with few duplicate rows as well.

STEP 2. Business Intelligence Development Studio – BIDS :-

- Open BIDS, Create one SSIS project. Here my Project name is PHPRING.

- In this project, create one Package and name it as say “Sort”.

- Drag and drop one Data Flow Task (DFT) to Control Flow.

- Double click on Dataflow Task. Then drag and drop – Flatfile Source, Sort transformationand OLEDB Destination and connect them all.

- Here, my source is in File format- That’s why I am taking source as Flatfile. And I want to load my Source data into SQL table – That’s why I am taking destination as oledb.

Follow below screen shot if you have any doubt while taking Source, Sort transformation and Destination.

STEP 3. Configuring Flatfile Source :-

Double click on Flatfile source to set properties. Once you double click on Flat file source, the below wizard will appear. Click on New button

Once we click on “New” we will get another window. Finally click on “OK” button.

STEP 4. Configuring Sort Transformation in SSIS :-

It’s time to Configure Sort transformation in SSIS. Double clicking on Sort transformation will pop up the below window. Now, set the properties like done below.

- Check column “name” in the available columns.

- Give Sort order as Ascending.

- Check the box below ( If you want to Remove rows with duplicate sort values).

- Finally click on “OK” button.

So, till here we complete the configuration of Flatfile Source and Sort transformation in SSIS. Finally, we can complete this with the configuration of OLEDB Destination.

STEP 5. Configuring OLEDB Destination :-

Double click on OLEDB Destination. The below window will appear on our Screen.

Clicking on “New” button will get another window.

Once again click on “New” button. We will get another window. Follow the below screen shot.

Finally click on “OK” button. So guys I configured everything, it’s time to run our package and observe the output.

STEP 6. Executing our Sort Transformation in SSIS Package :-

In this screen shot we can clearly observe that 12 rows are coming from Source. But only 8 rowsare loaded into SQL table “Sort”.

Between Source and Destination, we have Sort Transformation. So it eliminates all the duplicate rows as we check the box above in STEP 4. That’s why we got only 8 rows as Output.

Now, I am switching to my MS Sql Server to check table (sort). My output data is stored in this SQL table. In the below screen shot, we can clearly observe the output (8 rows). Also, we can see the Sorted column “ename” in Ascending order.

STEP 7. Performance issues with Sort transformation in SSIS :-

- It is a blocked transformation. I already mentioned few points at the starting of this article.

- It always degrades the performance So it is very bad to Implement.

- When a blocking transformation is encountered in the Data flow, a new buffer is created for its output and a new thread is introduced into the Data flow.

- Instead of using Sort transformation in SSIS, It is better to Sort out data with Tsql (Order by clause).

Learn SSIS Derived Column Transformation

Aim :- Earlier tutorials were targeted to provide insight on various transformations in SSIS. Continuing with the same approach, today we are going to learn one more SSIS Transformation named as Derived Column Transformation. We fill follow our traditional method i.e. Learning things via doing them practically. This always gives a better vision of the concept.

Description :- Read this loudly – SSIS Derived Column Transformation. The name itself indicates that it is going to derive something from column(s). If this thing came to your mind then you hit the bulls eye. It will create a new column or replace existing column by writing an expression in Derived column editor. The expression may contain functions like Number, string, date, etc. and also we can use the variables.

Let’s understand this with an example – Suppose, we have three input columns like First_name, Middle_name and Last_name. Finally, I want only one column as an output column i.e. Full name. We can achieve this by concatenating the three input columns (First_name, Middle_name, Last_name).

But now the problem is how we can concatenate these three input columns. So, the solution to this problem is Expressions i.e. we can write a following expression (Expression starts with equal (=) sign):- =First_name+” ”+Middle_name+” ”+Last_name

NOTE – In above Expression, we are using “+” symbol for concatenation.

Recently someone asked us one question on Facebook which is quoted as below –

QUESTION –

Hi Everyone, Can you suggest me, if I have an input file like –

Input file :-

Id Genderid

1 1

2 2

3 3

Id Genderid

1 1

2 2

3 3

Then, how can we show an output like below?

Output file :-

Id GenderId Gender_Name

1 1 Male

2 2 Female

3 3 Unknown

Id GenderId Gender_Name

1 1 Male

2 2 Female

3 3 Unknown

Let us make this question clearer (Question modified a little bit for more understanding) –

While moving the input data into a table, user wants a new column (i.e. Gender_name) by referencing to the column “GenderID” which means –

- “1” as Male,

- “2” as “Female”, and

- “3” as “Unknown”

ANSWER –

SELECT Id, GenderId,

newcol =

CASE

WHEN GenderId=1 THEN ‘Male’

WHEN GenderId=2 THEN ‘Female’

ELSE ‘Unknown’

END

FROM <Tablename>

newcol =

CASE

WHEN GenderId=1 THEN ‘Male’

WHEN GenderId=2 THEN ‘Female’

ELSE ‘Unknown’

END

FROM <Tablename>

We will answer this question in two ways –

Scenario 1:- Here, I will show how we can achieve the output with SQL Server.

Scenario 2:- Here, I will show how we can achieve the same output via SSIS (By using SSIS Derived column transformation)

Before we begin our in depth study on 2 above scenarios, firstly let’s create a table.

================= Create Query to create Test table ====================

Use PhPRing

Go

Go

Create table Test

(Id varchar(10),

GenderId varchar(10))

Go

================ Insert Query to insert data into Test table =================

(Id varchar(10),

GenderId varchar(10))

Go

================ Insert Query to insert data into Test table =================

Insert into test values (‘1′,’1′),(‘2′,’2′),(‘3′,’3′)

Go

Go

Scenario 1:- With SQL server – By using CASE statement

Input Query –

SELECT Id,

GenderId,

Gender_Name=CASE

WHEN GenderId = 1 THEN‘Male’

WHEN GenderId = 2 THEN‘Female’

ELSE‘Unknown’

END

FROM Test

GenderId,

Gender_Name=CASE

WHEN GenderId = 1 THEN‘Male’

WHEN GenderId = 2 THEN‘Female’

ELSE‘Unknown’

END

FROM Test

Output –

Scenario 1 – With CASE statement in MS SQL Server

Scenario 2:- With SQL Server integration Services – By using SSIS Derived column transformation

- Create one SSIS package and give any name, say “Generate_Newcol”.

- Drag and drop Data Flow Task (DFT).

- Double click on Data Flow Task. Now, drag and drop OLEDB source and create connection to your database say, PhpRing in this example. (I hope you all know how to create a connection to the database).

- Now, drag and drop SSIS Derived Column Transformation and create a connection between OLEDB sources to Derived column.

- Double click on Derived Column Transformation. Finally it will look like below image.

Note down the things which needs to be done in the above Derived column Transformation editor.

- Expand columns.

- Provide Derived column name, say = Gender_Name.

- Derived column column = <add new column>.

- Write an Expression as = [GenderId]==”1″?”Male”: [GenderId]==”2″?”Female”:”Unknown”

Explanation about the expression – This expression will also work similar like CASE statement/IF statement. I hope you all know how IF works in reality. In case you are not sure, refer below line to understand the functionality of IF statement.

“If something equal to some value, do like this else do like other”.

Below are the Things to remember when writing an expression in SSIS Derived Column transformation.

- If we are going to equal any value then we must use two equal symbols i.e. “==”.

- If we equal any string fields, we must enclose them within double quotes i.e. “”.

- If there is anything wrong with your expression then the expression will be highlighted in Red color and we all very well know that red color indicates an error.

- If everything is right with your expression then the expression will be highlighted in black color.

- If you are writing an expression in editor and are using any columns then, just drag & drop those columns in your expression editor. This will avoid manual type errors such as column names mismatch, etc. As, Column names are case sensitive so expression may not be valid if column names are not written exactly with proper casing.

This is all about the two scenarios. Now, I am moving ahead and will execute my SSIS Derived Column Transformation package. Let’s see what will happen now.

Scenarion 2 – With Derived Column Transformation in SSIS

We can see in the above image that column Gender_Name is created and the result set is exactly similar to Scenario 1 result.

SSIS 2012

Final Output

As we can see in snapshot the Null values are replaced by "Unknown" for SalePersonName and with 0 for SaleAmount

SSIS -How To Use Derived Column Transformation [Replace Null Values]

Scenario:

Let’s say we are extracting data from flat file or from Database table. Few of the columns have Null values. In our destination we do not want to insert these values as NULL. We want to replace Null values with “Unknown” for character type columns and 0 ( Zero) for integer type columns.

Solution:

If we are extracting data from Database table then we can use IsNULL function and replace with required values but If we are extracting data from flat file then we cannot use this function and we have to use some transformation in SSIS to perform this operation.

In derived column transformation, there are different types of functions and operators are available those can help us to achieve this required results

- Mathematical Function

- String Functions

- Date/Time Functions

- Null Functions

- Type Casts Functions

- Operators

Here are the steps how we will be replacing Null values by using Derived column Transformation

Step 1:

Create connection to your flat file by using Flat File Source. I have used below data in text file for this example.Notice SaleAmount for Italy,andy is missing and SalePerson is missing for Brazil,,200

CountryName,SalePersonName,SaleAmount

uSA,aamir shahzad,100

Italy,andy,

UsA,Mike,500

brazil,Sara,1000

INdia,Neha,200

Brazil,,200

Mexico,Anthony,500

Step 2:

Bring Derived Column Transformation in Data Flow Pane and then connect Flat File Source to it. By double clicking derived column transformation, write expressions as shown below for SSIS 2008 and SSIS 2012.

SSIS 2012 introduced new function REPLACENULL that we can use and get the required results but in previous versions of SSIS, we have to use if else expressions with ISNULL Function to achieve this.

How you will read this expression

ISNULL(SalePersonName) ? "Unknown" : SalePersonName

IF (?) SalePersonName is Null then “Unknown” Else (:) Column Value itself( SalePersonName)

SSIS 2008 Derived Column Expression

Lookup Transformation in SSIS:

a SQL Server Integration Services (SSIS) package that you want to perform a lookup in order to supplement or validate the data in your data flow. A lookup lets you access data related to your current dataset without having to create a special structure to support that access.

To facilitate the ability to perform lookups, SSIS includes the Lookup transformation, which provides the mechanism necessary to access and retrieve data from a secondary dataset. The transformation works by joining the primary dataset (the input data) to the secondary dataset (the referenced data). SSIS attempts to perform an equi-join based on one or more matching columns in the input and referenced datasets, just like you would join two tables in in a SQL Server database.

Because SSIS uses an equi-join, each row of the input dataset must match at least one row in the referenced dataset. The rows are considered matching if the values in the joined columns are equal. By default, if an input row cannot be joined to a referenced row, the Lookup transformation treats the row as an error. However, you can override the default behavior by configuring the transformation to instead redirect any rows without a match to a specific output. If an input row matches multiple rows in the referenced dataset, the transformation uses only the first row. The way in which the other rows are treated depends on how the transformation is configured.

The Lookup transformation lets you access a referenced dataset either through an OLE DB connection manager or through a Cache connection manager. The Cache connection manager accesses the dataset held in an in-memory cache store throughout the duration of the package execution. You can also persist the cache to a cache file (.caw) so it can be available to multiple packages or be deployed to several computers.

The best way to understand how the Lookup transformation works is to see it in action. In this article, we’ll work through an example that retrieves employee data from the AdventureWorks2008R2 sample database and loads it into two comma-delimited text files. The database is located on a local instance of SQL Server 2008 R2. The referenced dataset that will be used by the Lookup transformation is also based on data from that database, but stored in a cache file at the onset of the package execution.

The first step, then, in getting this example underway is to set up a new SSIS package in Business Intelligence Development Studio (BIDS), add two Data Flow tasks to the control flow, and connect the precedence constraint from the first Data Flow task to the second Data Flow task, as I’ve done in Figure 1.

Figure 1: Adding data flow components to your SSIS package

Notice that I’ve named the fist Data Flow task Load data into cache and the second one Load data into file. These names should make it clear what purpose each task serves. The Data Flow tasks are also the only two control flow components we need to add to our package. Everything else is at the data flow level. So let’s get started.

Writing Data to a Cache

Because we’re creating a lookup based on cached data, our initial step is to configure the first data flow to retrieve the data we need from the AdventureWorks2008R2 database and save it to a cache file. Figure 2 shows what the data flow should look like after the data flow has been configured to cache the data. As you can see, you need to include only an OLE DB source and a Cache transformation.

Figure 2: Configuring the data flow that loads data into a cache

Before I configured the OLE DB source, I created an OLE DB connection manager to connect to theAdventureWorks2008R2 database on my local instance of SQL Server. I named the connection managerAdventureWorks2008R2.

I then configured the OLE DB source to connect to the AdventureWorks2008R2 connection manager and to use the following T-SQL statement to retrieve the data to be cached:

USE AdventureWorks2008R2;

GO

SELECT

BusinessEntityID,

NationalIDNumber

FROM

HumanResources.Employee

WHERE

BusinessEntityID < 250;

GO

SELECT

BusinessEntityID,

NationalIDNumber

FROM

HumanResources.Employee

WHERE

BusinessEntityID < 250;

Notice that I’m retrieving a subset of data from the HumanResources.Employee table. The returned dataset includes two columns: BusinessEntityID and NationalIDNumber. We will use the BusinessEntityID column to match rows with the input dataset in order to return a NationalIDNumber value for each employee. Figure 3 shows what your OLE DB Source editor should look like after you’ve configured it with the connection manager and T-SQL statement.

Figure 3: Using an OLE DB source to retrieve data from the AdventureWorks2008R2 database

You can view a sample of the data that will be cached by clicking the Preview button in the OLE DB Source editor. This launches the Preview Query Results dialog box, shown in Figure 4, which will display up to 200 rows of your dataset. Notice that a NationalIDNumber value is associated with each BusinessEntityID value. The two values combined will provide the cached data necessary to create a lookup in your data flow.

Figure 4: Previewing the data to be saved to a cache

After I configured the OLE DB source, I moved on to the Cache transformation. As part of the process of setting up the transformation, I first configured a Cache connection manager. To do so, I opened the Cache Transformationeditor and clicked the New button next to the Cacheconnection manager drop-down list. This launched the Cache Connection Manager editor, shown in Figure 5.

Figure 5: Adding a Cache connection manager to your SSIS package

I named the Cache connection manager NationalIdCache, provided a description, and selected the Use File Cache checkbox so my cache would be saved to a file. This, of course, isn’t necessary for a simple example like this, but having the ability to save the cache to a file is an important feature of the SSIS lookup operation, so that’s why I’ve decided to demonstrate it here.

Next, I provided and path and file name for the .caw file, and then selected the Columns tab in the Cache Connection Manager editor, which is shown in Figure 6.

Figure 6: Configuring the column properties in your Cache connection manager

Because I created my Cache connection manager from within the Cache transformation, the column information was already configured on the Columns tab. However, I had to change the Index Position value for theBusinessEntityID column from 0 to 1. This column is an index column, which means it must be assigned a positive integer. If there are more than one index columns, those integers should be sequential, with the column having the most unique values being the lowest. In this case, there is only one index column, so I need only assign one value. The NationalIDNumber is a non-index column and as such should be configured with an IndexPosition value of 0, the default value.

When a Cache connection manager is used in conjunction with a Lookup transformation, as we’ll be doing later in this example, the index column (or columns) is the one that is mapped to the corresponding column in the input dataset. Only index columns in the referenced dataset can be mapped to columns in the input dataset.

After I set up the Cache connection manager, I configured the Cache transformation. First, I confirmed that the Cacheconnection manager I just created is the one specified in the Cache connection manager drop-down list on theConnection Manager page of the Cache Transformation editor, as shown in Figure 7.

Figure 7: Setting up the Cache transformation in your data flow

Next, I confirmed the column mappings on the Mappings page of the Cache Transformation editor. Given that I hadn’t changed any column names along with way, these mappings should have been done automatically and appear as they do in Figure 8.

Figure 8: Mapping columns in the Cache transformation

That’s all there is to configuring the first data flow to cache the referenced data. I confirmed that everything was running properly by executing only this data flow and then confirming that the .caw file had been created in its designated folder. We can now move on to the second data flow.

Performing Lookups from Cached Data

The second data flow is the one in which we perform the actual lookup. We will once again retrieve employee data from the AdventureWorks2008R2 database, look up the national ID for each employee (and adding it to the data flow), and save the data to one of two files: the first for employees who have an associated national ID and the second file for those who don’t. Figure 9 shows you what your data flow should look like once you’ve added all the components.

Figure 9: Configuring the data flow to load data into text files

The first step I took in setting up this data flow was to add an OLE DB source and configure it to connect to theAdventureWorks2008R2 database via to the AdventureWorks2008R2 connection manager. I then specified that the source component run the following T-SQL statement in order to retrieve the necessary employee data:

SELECT

BusinessEntityID,

FirstName,

LastName,

JobTitleFROM

HumanResources.vEmployee;

BusinessEntityID,

FirstName,

LastName,

JobTitleFROM

HumanResources.vEmployee;

The data returned by this statement represents the input dataset that will be used for our lookup operation. Notice that the dataset includes the BusinessEntityID column, which will be used to map this dataset to the referenced dataset. Figure 10 shows you what the Connection Manager page of the OLE DB Source editor should look like after you’ve configured that connection manager and query.

Figure 10: Configuring an OLE DB source to retrieve employee data

As you did with the OLE DB source in the first data flow, you can preview the data returned by the SELECTstatement by clicking the Preview button. Your results should look similar to those shown in Figure 11.

Figure 11: Previewing the employee data returned by the OLE DB source

My next step was to add a Lookup transformation to the data flow. The transformation will attempt to match the input data to the referenced data saved to cache. When you configure the transformation you can choose the cache mode and connection type. You have three options for configuring the cache mode:

- Full cache: The referenced dataset is generated and loaded into cache before the Lookup transformation is executed.

- Partial cache: The referenced dataset is generated when the Lookup transformation is executed, and the dataset is loaded into cache.

- No cache: The referenced dataset is generated when the Lookup transformation is executed, but no data is loaded into cache.

For this exercise, I selected the first option because I am generating and loading the data into cache before I run theLookup transformation. Figure 12 shows the General page of the Lookup Transformation editor, with the Full Cache option selected.

Figure 12: Configuring the General page of the Lookup Transformation editor

Notice that the editor also includes the Connection type section, which supports two options: Cache Connection Manager and OLE DB Connection Manager. In this case, I selected the Cache Connection Manager option because I will be retrieving data from a cache file, and this connection manager type is required to access the data in that file.

As you can see in Figure 12, you can also choose an option from the drop-down list Specify how to handle rows with no matching entries. This option determines how rows in the input dataset are treated if there are no matching rows in the referenced database. By default, the unmatched rows are treated as errors. However, I selected the Redirect rows to no match output option so I could better control the unmatched rows, as you’ll see in a bit.

After I configured the General page of the Lookup Transformation editor, I moved on to the Connection page and ensured that the Cache connection manager named NationalIdCache was selected in the Cache Connection Manager drop-down list. This is the same connection manager I used in the first data flow to save the dataset to a cache file. Figure 13 shows the Connection page of the Lookup Transformation editor with the specified Cache connection manager.

Figure 13: Configuring the Connection page of the Lookup Transformation editor

Next, I configured the Columns page of the Lookup Transformation editor, shown in Figure 14. I first mapped theBusinessEntityID input column to the BusinessEntityID lookup column by dragging the input column to the lookup column. This process created the black arrow between the tables that you see in the figure. As a result, theBusinessEntityID columns will be the ones used to form the join between the input and referenced datasets.

Figure 14: Configuring the Columns page of the Lookup Transformation editor

Next, I selected the checkbox next to the NationalIDNumber column in the lookup table to indicate that this was the column that contained the lookup values I wanted to add to the data flow. I then ensured that the lookup operation defined near the bottom of the Columns page indicated that a new column would be added as a result of the lookup operation. The Columns page of your Lookup Transformation editor should end up looking similar to Figure 14.

My next step was to add a Flat File destination to the data flow. When I connected the data path from the Lookuptransformation to the Flat File destination, the Input Output Selection dialog box appeared, as shown in Figure 15. The dialog box let’s you chose which data flow output to send to the flat file—the matched rows or the unmatched rows. In this case, I went with the default, Lookup Match Output, which refers to the matched rows.

Figure 15: Selecting an output for the Lookup transformation

Next, I opened the Flat File Destination editor and clicked the New button next to the Flat File ConnectionManager drop-down list. This launched the Flat File Connection Manager editor, shown in Figure 16. I typed the name MatchingRows in the Connection manager name text box, typed the file nameC:\DataFiles\MatchingRows.txt in the File name text box, and left all other setting with their default values.

Figure 16: Setting up a Flat File connection manager

After I saved my connection manager settings, I was returned to the Connection Manager page of the Flat File Destination editor. The MatchingRows connection manager was now displayed in the Flat File ConnectionManager drop-down list, as shown in Figure 17.

Figure 17: Configuring a Flat File destination in your data flow

I then selected the Mappings page (shown in Figure 18) to verify that the columns were properly mapped between the data flow and the file destination. One thing you’ll notice at this point is that the data flow now includes theNationalIDNumber column, which was added to the data flow by the Lookup transformation.

Figure 18: Verifying column mappings in your Flat File destination

The next step I took in configuring the data flow was to add a second Flat File destination and connect the second data path from the Lookup transformation to the new destination. I then configured a second connection manager with the name NonMatchingRows and the file name C:\DataFiles\NonMatchingRows.txt. All rows in the input data that do not match rows in the referenced data will be directed to this file. Refer back to Figure 9 to see what your data flow should look like at this point.

Running Your SSIS Package

The final step, of course, is to run the SSIS package in BIDS. When I ran the package, I watched the second data flow so I could monitor how many rows matched the lookup dataset and how many did not. Figure 19 shows the data flow right after I ran the package.

Figure 19: Running your SSIS package

In this case, 249 rows in the input dataset matched rows in the referenced dataset, and 41 rows did not. These are the numbers I would have expected based on my source data. I also confirmed that both text files had been created and that they contained the expected data.

As you can see, the Lookup transformation makes it relatively easy to access referenced data in order to supplement the data flow. For this example, I retrieved my referenced data from the same database as I retrieved the input data. However, that referenced data could have come from any source whose data could be saved to cache, or it could have come directly from another database through an OLE DB connection. Once you understand the principles behind the Lookup transformation, you can apply them to your particular situation in order to create an effective lookup operation.

Lookup, Cache Transformation & Cache Connection

Cache Connection Manager

- This connection manager is to define the cache

- Cache will be stored in a memory, however we can create cache file to hold the data.

- Once we feed the data into that file (*.caw) , later in all data flow tasks, we can use connection manager and get the data from that cache file

Cache Transformation

- This is one of the Data flow transformation

- It uses memory cache / file cache as configured in Cache Connection Manager and pulls data from there

- This is a very helpful transformation in terms of performance improvement

Lookup Transformation

- Basically it is being used to lookup the data

- It is using equi-join.

- All the values from source table (joining column) SHOULD exist in Reference table. If not, it will throw an

error

- NULL value also will be considered as non-matching row

- This is CASE-SENSITIVE.

- It can internally use Cache Connection / OLEDB Connection.

- There can be 3 cache types

(A) Full Cache

- Cache connection manager can be used only with this type of cache

- In this case, when package starts, data will be pulled and kept into memory cache / file cache

and later only cache will be used and not database

- We might not get the latest data from database

(B) Partial Cache

- Cache connection manager can't be used with this

- "Advance" tab of Lookup transformation will be enabled only in case of Partial cache where one

can configure cache size

- This is bit different than Full cache

- Initially cache will be empty. For each value, first it checks in Cache, if not found then goes to

database, if found from database, then stores that value in cache so it can be used in later stage.

- Startup time will be less than Full cache but processing time will be longer

- Also lot of available memory should be there.

(C) No Cache

- Every time, it will get it from database

- There are 3 outputs

(A) Matching Row

(B) No Matching Row

(C) Error

EXAMPLE

Pre-requisite

Execute the following query in database

Steps

1. Right click in Connection Tray and click on "New Connection"

2. select "CACHE" and click on Add

3. Check "Use File Cache" checkbox to store the cache data in a file.

4. select the file destination and give some name

5. Click on "columns" tab and start adding columns.

6. Add 2 columns (Country , Region) with IndexPosition 1 and 0 respectively.

Index Position 1 : this will be a joining key on which we will use joining conditions

Index Position 0 : Other columns

7. Drag data flow task and rename as "Build Cache"

8. Go inside DFT and drag OLEDB source which should refer to LookupDemoReference table.

9. Drag Cache Transformation and connect it with source

10. In Connection Manager, select created cache connection manager

11. click on mapping and make sure, you have proper mapping between columns.

12. Go back to control flow and drag one more data flow task and rename it to "Lookup Data"

13. Connect 1st DFT to 2nd DFT. It should look like this.

14. Go to Lookup Data and add OLEDB source which should point to LookupDemo table

15. Drag Lookup Transformation and connect it with source

16. Select "Full Cache" in General tab of Lookup Transformation. Select "Cache Connection"

17. Select created connection manager and in Connection tab

18. Do column mappings as shown below.

19. Drag OLEDB destination and connect it with Lookup Transformation. make sure to choose "Lookup Match output"

20. OLEDB destination should point to LookupDemoOutput table

20. Column mapping should be like this.

21. Now execute the package.

22. Package will be failed at lookup transformation

23. Reason of failure.

- Source table has one record with INDIA which is not available in Reference table, this signifies that

Lookup transformation is CASE-SENSITIVE

- Lookup Transformation is different then Merge Join here. Merge join will ignore the rows if they are not

matching, but Lookup will throw an error.

24a. Open Lookup Transformation and change settings like this.

24b. Now drag Derived Column Transformation and connect it with Lookup Transformation. Make sure Lookup No Match Output gets displayed on the arrow.

25. In Derived Column, add a new column like this. Make sure to cast it into DT_STR

26. Remove the connection between OLEDB Destination and Lookup Transformation.

26. Now drag "Union All Transformation" and connect it with Lookup Transformation. Make sure Lookup Match Output gets displayed on the arrow.

27. Select "Invalid Column" in last row, last column

28. Connect Union All transformation to OLEDB destination

29. Whole DFT will look like this.

30. Execute the package.

31. We can see that 1 rows has been redirected as "No match output" and finally using Union All it is reaching to destination.

32. Result table is looking like this.

33. This signifies following things.

- Lookup Transformation is Case-sensitive

- If 2 reference records are existing then Lookup Trans. picks up only 1st record.

- Lookup Trans. fails if joining key column is not in reference table in case we haven't configure No

Match output.

- This connection manager is to define the cache

- Cache will be stored in a memory, however we can create cache file to hold the data.

- Once we feed the data into that file (*.caw) , later in all data flow tasks, we can use connection manager and get the data from that cache file

Cache Transformation

- This is one of the Data flow transformation

- It uses memory cache / file cache as configured in Cache Connection Manager and pulls data from there

- This is a very helpful transformation in terms of performance improvement

Lookup Transformation

- Basically it is being used to lookup the data

- It is using equi-join.

- All the values from source table (joining column) SHOULD exist in Reference table. If not, it will throw an

error

- NULL value also will be considered as non-matching row

- This is CASE-SENSITIVE.

- It can internally use Cache Connection / OLEDB Connection.

- There can be 3 cache types

(A) Full Cache

- Cache connection manager can be used only with this type of cache

- In this case, when package starts, data will be pulled and kept into memory cache / file cache

and later only cache will be used and not database

- We might not get the latest data from database

(B) Partial Cache

- Cache connection manager can't be used with this

- "Advance" tab of Lookup transformation will be enabled only in case of Partial cache where one

can configure cache size

- This is bit different than Full cache

- Initially cache will be empty. For each value, first it checks in Cache, if not found then goes to

database, if found from database, then stores that value in cache so it can be used in later stage.

- Startup time will be less than Full cache but processing time will be longer

- Also lot of available memory should be there.

(C) No Cache

- Every time, it will get it from database

- There are 3 outputs

(A) Matching Row

(B) No Matching Row

(C) Error

EXAMPLE

Pre-requisite

Execute the following query in database

CREATE TABLE LookupDemo (EID int, EName varchar(10), CountryVARCHAR(10))

CREATE TABLE LookupDemoReference (Country VARCHAR(10), RegionVARCHAR(15))

CREATE TABLE LookupDemoOutput (EID int, EName varchar(10), CountryVARCHAR(10), Region VARCHAR(15))

INSERT INTO LookupDemo (EID,EName,Country) VALUES

(1,'Nisarg','India'),

(2,'Megha','INDIA'),

(3,'Swara','China'),

(4,'Nidhi','USA'),

(5,'Lalu','Japan')

INSERT INTO LookupDemoReference (Country, Region) VALUES

('India','ASIA'),

('India','ASIA-Pacific'),

('China','ASIA-Pacific'),

('USA','North America'),

('Japan','ASIA-Pacific')

Steps

1. Right click in Connection Tray and click on "New Connection"

2. select "CACHE" and click on Add

3. Check "Use File Cache" checkbox to store the cache data in a file.

4. select the file destination and give some name

5. Click on "columns" tab and start adding columns.

6. Add 2 columns (Country , Region) with IndexPosition 1 and 0 respectively.

Index Position 1 : this will be a joining key on which we will use joining conditions

Index Position 0 : Other columns

7. Drag data flow task and rename as "Build Cache"

8. Go inside DFT and drag OLEDB source which should refer to LookupDemoReference table.

9. Drag Cache Transformation and connect it with source

10. In Connection Manager, select created cache connection manager

11. click on mapping and make sure, you have proper mapping between columns.

12. Go back to control flow and drag one more data flow task and rename it to "Lookup Data"

13. Connect 1st DFT to 2nd DFT. It should look like this.

14. Go to Lookup Data and add OLEDB source which should point to LookupDemo table

15. Drag Lookup Transformation and connect it with source

16. Select "Full Cache" in General tab of Lookup Transformation. Select "Cache Connection"

17. Select created connection manager and in Connection tab

18. Do column mappings as shown below.

19. Drag OLEDB destination and connect it with Lookup Transformation. make sure to choose "Lookup Match output"

20. OLEDB destination should point to LookupDemoOutput table

20. Column mapping should be like this.

21. Now execute the package.

22. Package will be failed at lookup transformation

23. Reason of failure.

- Source table has one record with INDIA which is not available in Reference table, this signifies that

Lookup transformation is CASE-SENSITIVE

- Lookup Transformation is different then Merge Join here. Merge join will ignore the rows if they are not

matching, but Lookup will throw an error.

24a. Open Lookup Transformation and change settings like this.

24b. Now drag Derived Column Transformation and connect it with Lookup Transformation. Make sure Lookup No Match Output gets displayed on the arrow.

25. In Derived Column, add a new column like this. Make sure to cast it into DT_STR

26. Remove the connection between OLEDB Destination and Lookup Transformation.

26. Now drag "Union All Transformation" and connect it with Lookup Transformation. Make sure Lookup Match Output gets displayed on the arrow.

27. Select "Invalid Column" in last row, last column

28. Connect Union All transformation to OLEDB destination

29. Whole DFT will look like this.

30. Execute the package.

31. We can see that 1 rows has been redirected as "No match output" and finally using Union All it is reaching to destination.

32. Result table is looking like this.

33. This signifies following things.

- Lookup Transformation is Case-sensitive

- If 2 reference records are existing then Lookup Trans. picks up only 1st record.

- Lookup Trans. fails if joining key column is not in reference table in case we haven't configure No

Match output.

Merge Transformation in SSIS

In this article, we’ll look at another type of component: the

Merge Join transformation. The Merge Jointransformation lets us join data from more than one data source, such as relational databases or text files, into a single data flow that can then be inserted into a destination such as a SQL Server database table, Excel spreadsheet, text file, or other destination type. The Merge Join transformation is similar to performing a join in a Transact-SQL statement. However, by using SSIS, you can pull data from different source types. In addition, much of the work is performed in-memory, which can benefit performance under certain condition.

In this article, I’ll show you how to use the

Merge Join transformation to join two tables from two databases into one data flow whose destination is a single table. Note, however, that although I retrieve data from the databases on a single instance of SQL Server, it’s certainly possible to retrieve data from different servers; simply adjust your connection settings as appropriate.

You can also use the

Merge Join transformation to join data that you retrieve from Excel spreadsheets, text or comma-separated values (CSV) files, database tables, or other sources. However, each source that you join must include one or more columns that link the data in that source to the other source. For example, you might want to return product information from one source and manufacturer information from another source. To join this data, the product data will likely include an identifier, such as a manufacturer ID, that can be linked to a similar identifier in the manufacturer data, comparable to the way a foreign key relationship works between two tables. In this way, associated with each product is a manufacturer ID that maps to a manufacturer ID in the manufacturer data. Again, the Merge Join transformation is similar to performing a join in T-SQL, so keep that in mind when trying to understand the transformation.Preparing the Source Data for the Data Flow

Before we actually set up our SSIS package, we should ensure we have the source data we need for our data flow operation. To that end, we need to two databases:

Demo and Dummy. Of course, you do not need to use the same data structure that we’ll be using for this exercise, but if you want to follow the exercise exactly as described, you should first prepare your source data.

To help with this demo, I created the databases on my local server. I then copied data from the

AdventureWorks2008 database into those databases. Listing 1 shows the T-SQL script I used to create the databases and their tables, as well as populate those tables with data.USE master;

GO

IF DB_ID('Demo') IS NOT NULL

DROP DATABASE Demo;

GO

CREATE DATABASE Demo;

GO

IF DB_ID('Dummy') IS NOT NULL

DROP DATABASE Dummy;

GO

CREATE DATABASE Dummy;

GO

IF OBJECT_ID('Demo.dbo.Customer') IS NOT NULL

DROP TABLE Demo.dbo.Customer;

GO

SELECT TOP 500

CustomerID,

StoreID,

AccountNumber,

TerritoryID

INTO Demo.dbo.Customer

FROM AdventureWorks2008.Sales.Customer;

IF OBJECT_ID('Dummy.dbo.Territory') IS NOT NULL

DROP TABLE Dummy.dbo.Territory;

GO

SELECT

TerritoryID,

Name AS TerritoryName,

CountryRegionCode AS CountryRegion,

[Group] AS SalesGroup

INTO Dummy.dbo.Territory

FROM AdventureWorks2008.Sales.SalesTerritory;

Listing 1: Creating the Demo and Dummy databases

As Listing 1 shows, I use a

SELECT…INTO statement to create and populate the Customer table in the Demo database, using data from the Customer table in the AdventureWorks2008 database. I then use a SELECT…INTO statement to create and populate the Territory table in the Dummy database, using data from the SalesTerritory table in theAdventureWorks2008 database.Creating Our Connection Managers

Once we’ve set up our source data, we can move on to the SSIS package itself. Our first step, then, is to create an SSIS package. Once we’ve done that, we can add two

OLE DB connection managers, one to the Demo database and one to the Dummy database.

To create the first connection manager to the

Demo database, right-click the Connection Manager window, and then click New OLE DB Connection, as shown in Figure 1.

Figure 1: Creating an

OLE DB connection manager

When the

Configure OLE DB Connection Manager dialog box appears, click New. This launches the Connection Manager dialog box, where you can configure the various options with the necessary server and database details, as shown in Figure 2. (For this exercise, I created the Demo and Dummy databases on the ZOO-PC\CAMELOT SQL Server instance.)

Figure 2: Configuring a new connection manager

After you’ve set up your connection manager, ensure that you’ve configured it correctly by clicking the

Test Connection button. You should receive a message indicating that you have a successful connection. If not, check your settings.

Assuming you have a successful connection, click

OK to close the Connection Manager dialog box. You’ll be returned to the Configure OLE DB Connection Manager dialog box. Your newly created connection should now be listed in the Data connections window.

Now create a connection manager for the

Dummy database, following the same process that you used for the Demodatabase.

The next step, after adding the connection managers to our SSIS package, is to add a

Data Flow task to the control flow. As you’ve seen in previous articles, the Data Flow task provides the structure necessary to add our components (sources, transformations, and destinations) to the data flow.

To add the

Data Flow task to the control flow, drag the task from the Control Flow Items section of the Toolbox to the control flow design surface, as shown in Figure 3.

Figure 3: Adding a

Data Flow task to the control flow

The

Data Flow task serves as a container for other components. To access that container, double-click the task. This takes you to the design surface of the Data Flow tab. Here we can add our data flow components, starting with the data sources.Adding Data Sources to the Data Flow

Because we’re retrieving our test data from two SQL Server databases, we need to add two

OLE DB Sourcecomponents to our data flow. First, we’ll add a source component for the Customer table in the Demo database. Drag the component from the Data Flow Source s section of the Toolbox to the data flow design surface.

Next, we need to configure the

OLE DB Source component. To do so, double-click the component to open the OLE DB Source Editor, which by default, opens to the Connection Manager page.

We first need to select one of the connection managers we created earlier. From the

OLE DB connection managerdrop-down list, select the connection manager you created to the Demo database. On my system, the name of the connection manager is ZOO-PC\CAMELOT.Demo. Next, select the dbo.Customer table from the Name of the table or the view drop-down list. The OLE DB Source Editor should now look similar to the one shown in Figure 4.

Figure 4: Configuring the

OLE DB Source Editor

Now we need to select the columns we want to retrieve. Go to the

Columns page and verify that all the columns are selected, as shown in Figure 5. These are the columns that will be included in the component’s output data flow. Note, however, if there are columns you don’t want to include, you should de-select those columns from theAvailable External Columns list. Only selected columns are displayed in the External Column list in the bottom grid. For this exercise, we’re using all the columns, so they should all be displayed.

Figure 5: Selecting columns in the

OLE DB Source Editor

Once you’ve verified that the correct columns have been selected, click

OK to close the OLE DB Source Editor.

Now we must add an

OLE DB Source component for the Territory table in the Dummy database. To add the component, repeat the process we’ve just walked through, only make sure you point to the correct database and table.

After we’ve added our source components, we can rename them to make it clear which one is which. In this case, I’ve renamed the first one

Demo and the second one Dummy, as shown in Figure 6.

Figure 6: Setting up the

OLE DB source components in your data flow

To rename the