SSIS Basics: Setting Up Your Initial Package:

I started using SQL Server Integration Services (SSIS)

when I had a job that required me to move and manipulate data between

files and other data sources. I did a bit of research using the

resources available—Twitter, Simple-Talk, SQL Server Central, etc.—and

concluded that SSIS was the right way to go. I had to get my head around

it quite rapidly, but I couldn’t find help at the level I required. For

that reason, I noted the points where I had struggled so that, once I’d

learned more, I could help others who might otherwise struggle as I

did.What can you use SSIS for?

Essentially, SSIS can be used for any task related to files or data. You can, for example,- Move and rename files

- Delete, update or insert data

- Execute SQL scripts or stored procedures

- Import and export data between different file types, such as Access, Excel, or any data source that supports an ODBC connection

Getting Started

SSIS is available only in SQL Server 2005 onwards. You create and develop SSIS in SQL Server Business Intelligence Development Studio (BIDS), a visual development tool based on Microsoft Visual Studio. (BIDS has morphed into SQL Server Data Tools (SSDT) in SQL Server 2012.)Before going further, there is some terminology that’s important to understand. SSIS files are organized into packages, projects and solutions. The package is at the bottom of the hierarchy and contains the tasks necessary to perform the actual extract, transform, and load (ETL) operations. Each package is saved as a .dtsx file and is part of a project. You can include one or more packages in a project. That project, in turn, is part of a solution, which is at the top of the hierarchy. You can include one or more projects within a solution.

When you first open BIDS, you’re presented with the interface shown in Figure 1.

Figure 1: The SSIS interface in BIDS

To create an SSIS package, point to the File menu, point to New, and click Project. This launches the New Project dialog box, shown in Figure 2.

Figure 2: The New Project dialog box in BIDS

In the New Project dialog box, select the Integration Services Project template. Then, provide a name for the project in the Name text box. Next, in the Location text box, specify the folder where your project files should be saved, and then provide a name for the solution in the Solution Name text box.After you’ve entered the project and solution information, click OK. Your new package will open in the SSIS window, as shown in Figure 3.

Figure 3: Creating a new SSIS package in BIDS

Notice that the SSIS interface is divided into the following five sections (windows): - Control Flow Items: The components necessary to control a package’s workflow. For example, the section includes components that let you move or copy data, run SQL statements, or send emails. (The components will be explained in more detail in this article and in articles that will follow.)

- Connection Managers: The connections to your data sources (whether retrieving or loading data). Your data sources can include SQL Server databases, CSV files, Excel spreadsheets, and a variety of other sources.

- Solution Explorer: A hierarchical view of the data sources, data source views, packages, and other components included within the current solution.

- Properties: The properties and their values for the package or the selected component within that package.

- SSIS Designer: The main working area for developing your SSIS package. SSIS Designer is broken into four tabs: Control Flow, Data Flow, Event Handlers, and Package Explorer. We’ll look at each of these in greater detail as we progress through this series.

Control Flow Items

In this article, I focus on setting up the SSIS package and defining the data connections. I do not cover all the components in the Control Flow Items window. In the next article, I will demonstrate using, what I think is, the most important of these components—the Data Flow Task—and cover other control flow tasks in subsequent articles.Connection Managers

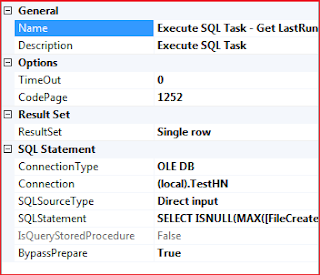

I will now explain how to create connection managers that connect to both Excel files and a SQL Server database. However, it is important to note that any connection created through the Connection Manager window is available only to the package it is created in.Connecting to an Excel File

One of the first steps you’ll often take when developing an SSIS package is to create the connection managers necessary to retrieve data from or load data into your data sources. You can also set up connections “on the fly,” so if you miss creating one here it can be done as part of other tasks. This approach is most commonly used when you wish to create a connection based on the source. For example, if you wish to copy data out of a SQL Server database and export it to an Excel spreadsheet, you can create the connection manager when you set up your data flow.To add a connection manager, right-click the blank area in the Connection Manager window, where it says Right-click here to add a new connection manager to the SSIS package, as shown in Figure 4.

Figure 4: Adding a connection manager to your SSIS package

This will launch a context menu that provides a number of

options for creating various types of connections, as Figure 5

illustrates.

Figure 5: Selecting which type of connection manager to create

Notice you can create connections for such sources as OLE DB,

ADO.NET, Analysis Services, and different types of files. In this case,

we want to create a connection to an Excel file, so click the New File Connection option. This will launch the File Connection Manager Editor dialog box, shown in Figure 6.

Figure 6: The File Connection Manager Editor dialog box

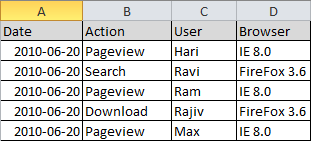

For this example, we’ll be connecting to an Excel file I

created for demonstration purposes. Figure 7 shows the worksheet I set

up in this file.

Figure 7: Excel worksheet used for demonstration purposes

I named the Excel file Employees.xlsx and saved it in the C:\Users\Annette\Documents folder.In the Usage type drop-down list in the File Connection Manager Editor dialog box, select Existing file. Next, click the Browse button, navigate to the folder that contains the Excel file, and select the file. The dialog box should now look like the one shown in Figure 8.

Figure 8: Configuring the File Connection Manager Editor dialog box

Once you’ve selected the file, click OK. The new connection manager will be added to the Connection Managers window and will be assigned the name of the file, as shown in Figure 9.

Figure 9: Viewing the new connection manager in the Connection Managers window

It is very easy to rename the connection manager to something

that may be more appropriate. To do so, right-click the new connection

manager and select Rename from the context menu, as show in Figure 10

Figure 10: Renaming a connection manager

The name then becomes updateable and you can rename it to whatever you like. In this case, I renamed the connection manager Employees (Excel), as shown in Figure 11.

Figure 11: Viewing the new connection manager name

When you view a connection manager in the Connection Managers window, you’ll see that each connection type is associated with a different icon. If you created an Excel connection from here, it is displayed with the same icon used for any flat file connection. However if you create an Excel connection when adding a component to the Data Flow tab, the connection manager will display an Excel Icon. Connecting to a SQL Server Table

Because our example will retrieve data from a SQL Server database, you’ll also need to create a connection manager for that database. To do so, you should again right-click the Connection Managers window to open the context menu, but this time, click the New OLE DB Connection option. The Configure OLE DB Connection Manager dialog box will appear, as shown in Figure 12.

Figure 12: Creating an OLE DB connection manager

If any OLE DB connections have already been defined on the package, they will appear in Data connections list. You can use one of these, if it fits your needs, or you can create a new one. To create a new connection, click the New button to launch the Connection Manager dialog box, shown in Figure 13.

Figure 13: Configuring an OLE DB connection manager

To configure the connection manager, select the SQL Server instance from the Server name drop-down list, and then select the authentication type. In this case, I selected the Use SQL Server Authentication option and provided a username and password. You might decide to select the Use Windows Authentication

option, in which case your current Windows credentials will be used to

establish the connection with SQL Server. In a later article, when we

look at deploying the package, we will look at how the connections can

be altered at run time and therefore how the login details can be

changed then. For now, ensure that you set up the login the way you need

it to run the package while you’re developing it.From the Select or enter a database name drop-down list, select the name of the AdventureWorks database. Your Connection Manager dialog box should now look similar to the one shown in Figure 14.

Figure 14: Configuring an OLE DB connection manager

Be sure to click the Test Connection

button to verify that you can connect to the target database. The

system will display a message similar to the one in Figure 15 to confirm

whether you’ve successfully connected to the database.

Figure 15: Testing your database connection

After you’ve confirmed your connection, click OK to close the message box, and then click OK to close the Connection Manager dialog box. You will be returned to the Configure OLE DB Connection Manager dialog box, shown in Figure 16.

Figure 16: Finalizing your OLE DB connection manager

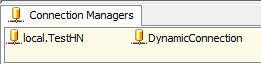

Notice that your new connection has been added to the Data connections list. Click OK to close the dialog box. The Connection Managers window will show your two connections. You’re now ready to start working with them.Solution Explorer

Within Solution Explorer, you can view all projects, packages, data sources and data source views that make up the solution.Adding New Projects

If you wish to add an additional project to a package, point to File on the menu bar, point to Add, and click New Project, as shown in Figure 17.

Figure 17: Adding a new project to a solution

The Add New Project window opens. Select Integration Services Project and in the Name box enter the name you wish to call the new project as shown in Figure 18.

Figure 18: Add New Project Wizard

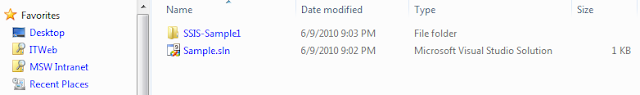

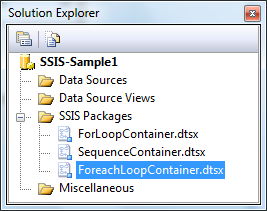

As you can see in Figure 19 a new project is added to the solution named “Dev” and will appear in Solution Explorer. The project will contain three empty folders named Data Sources, Data Source Views and Miscellaneous. The project will also contain a folder named SSIS Packages and within the folder a file named Package.dtsx, which is an empty SSIS package created automatically when the project is created. Figure 19 shows the new project and its folders in Solution Explorer.

Figure 19: The folders and package created in a new SSIS project

Data Sources

Earlier I showed you how to create connections in the Connection Managers window. As I mentioned, if a connection is created in the Connection Managers window, it is available only to the package it was created in. However, you can also create connections known as data sources, which are available to all packages in a project.To create a new data source, right-click Data Sources in Solution Explorer to open the Connection Manager dialog box (shown in Figure 20). Then fill in the options as you did when you created an OLE DB connection manager. Be sure to click Test Connection to confirm the connection has been created successfully.

Figure 20: Creating a new data source connection

The Data Source Wizard will appear, with the new data connection highlighted, as shown in Figure 21. After you review the settings, click Next.

Figure 21: The data connection in the Data Source Wizard

When the next

page of the wizard appears, type in a name for the data source. As this

is project wide, I would recommend you fully describe the source using

the server and database name. I have renamed my data source RGTest_AdventureWorks2008, as shown in Figure 22. I try to set up and follow consistent naming conventions.

Figure 22: Renaming the data source

After you’ve renamed the data source, click Finish. Your Data Source should now be listed under Data Sources in Solution Explorer,

as shown in Figure 23. Notice that the data source is saved with the

.ds file extension to indicate that it is indeed a data source.

Figure 23: Creating a data source in Solution Explorer

Initially, the new data source is not listed in your package’s Connection Managers

window; however, it is available to your package. Once you have made

use of the data source in the package it will be visible in the Connection Managers window.Data Source ViewsData source views, like data sources, are available to all packages in a project. A data source view is used to define a subset of a data from a data source. The data source view can include only some of the tables or it can be used to define relationships or calculated columns.

Because a data source view is based on a data source, you would normally create the data source before starting to create the data source view. However, this is not compulsory because you can create the data source when you’re creating the data source view. To create a data source view, right-click the Data Source Views folder and click New Data Source View, as shown in Figure 24.

Figure 24: Creating a data source view in Solution Explorer

When the Data Source View Wizard appears, click Next. The next page shows the data sources available to the project, as shown in Figure 25. (In this case, there’s only one.)

Figure 25: Available data sources

As you can see, the page shows the name of the data source in the Relational data sources list. The properties for the selected data source appear to the right, in the Data source properties

window. A data source must be selected before you can continue with the

wizard. If you haven’t created the data source you need, you can create

one now by clicking the New Data Source button.Once you’ve selected the necessary data source, click Next. The new page provides a list of the tables and views available through the selected data source. If you wish to filter the list, type the filter criteria in the Filter text box below the Available Objects list, and then click the filter icon to the right of the text box. For example, I typed Emp in the Filter text box, which reduced the list of available objects to those that contain “Emp” in their name, as shown in Figure 26.

Figure 26: Filtering tables and views in the data source

The next step is

to determine which tables and views you want to include in your data

source view. From the filtered list of tables and views in the Available Objects

list, select the objects you want to include. You can select more than

one object by clicking the first one, holding down the Ctrl key, and

then clicking the additional objects. Once you’ve selected the objects,

click the single right arrow button to move those objects to the Included Objects window. If you want to move all the listed objects, simply click the double right arrow button.Once an object has been moved to the Included Objects list, the single left arrow button and double left arrow button become active. These work the same as the right arrows. The single left arrow moves a single selected object or multiple selected objects from the Included objects list back to the Available objects list. The double left arrow moves all objects in the Included objects list back to the Available objects list.

Figure 27 shows the full list of available objects (without filters), minus the two objects that have been moved to the Included objects list. Notice that two additional objects are selected in the Available objects window. As you would expect, you can move the files to the Included objects list by clicking the single right arrow button.

If you click the Add Related Tables button beneath the Included objects list, all tables related to the objects in the Included objects list will be automatically added.

Figure 27: Adding tables and views to a data source view

Once all required objects have been selected, click Next.

You can now see a preview of what you have selected, and you can rename

the data source view to something more appropriate. If you have missed

an object, click the Back button to return to the previous page. For this example, I renamed my data source view AW2008-Employees. As you’re changing the name in the Name textbox, the name is also updated in the Preview window, as shown in Figure 28.

Figure 28: Renaming the data source view

If you are happy with the configuration, click Finish. The data source view is saved with the .dsv file extension and is added to the Data Source Views folder in Solution Explorer. A new window appears in SSIS Designer and shows the data source view in design mode, as shown in Figure 29.

Figure 29: Data source view in design mode

Amending a Data Source View

SSIS provides a number of options for modifying a data source view. Most of those options are at the table level. If you right-click the table name either on the design surface or in the Tables pane (on the left side of the screen), you can choose from the following options:- Adding a calculation

- Adding a relationship

- Replacing a table

- Deleting a table

- Reviewing data

Deleting an object

Suppose I added the Store table in error. I can delete from table from my data source view by right-clicking the table name and selecting the Delete table from DSV option, as shown in Figure 30.

Figure 30: Deleting a table from a data source view

You’ll then be prompted to confirm your deletion. When the Delete Objects message box appears, click OK, as shown in Figure 31.

Figure 31: Deleting objects from a data source view

When you click OK, the object is permanently removed from the data source view.

Adding a new column

To add a calculated column to a data source view, right-click the table name and select New Named Calculation to open the Create Named Calculation dialog box. Enter the new column name in the Column name text box, add an appropriate description in the Description text box, if required, and then create the calculation in the Expression text box. For this example, I’ve assigned the name Age to the column and added the description Current Age based on Birth Date. For the expression, I added the one shown in Figure 32. Note that, at this stage, there is no way to test whether your code is correct!

Figure 32: Creating a calculated column

Figure 33 shows us that the Age column has been added to our table. The icon next to the column shows that it is a calculated column.

Figure 33: Verifying that the calculated column has been added

To view the data

in the table and verify that the new column has been created correctly,

right-click one of the columns and then click Explore Data, as shown in Figure 34.

Figure 34: Viewing the data in the table

The Explore Employee Table window appears, as shown in Figure 35. We can now view all the data in the Employee table. Notice that the Age column has been added to the table (on the far right side) and displays the data returned by our expression.

Figure 35: Viewing data in the Employee table

Once you have

made all the necessary changes, save the data source view. It will then

be available for you to use in any of your packages in the project.Summary

In this article, I’ve shown you how to create an SSIS package and set up connection managers, data sources, and data source views. In the next article, I will show you how to set up a package that retrieves data from a SQL Server database and loads it into an Excel file. I will also show you how to add a derived column that calculates the data to be inserted into the file. In addition, I will demonstrate how to run the package.In future articles, I plan to show you how to deploy the package so it can be run as part of a scheduled job or called in other ways. I also plan to cover how to use variables and how they can be passed between tasks. I also aim to cover more control flow tasks and data flow components, including those that address conditional flow logic and for-each looping logic. There is much much more that can be done using SSIS, and I hope over the course of this series to cover as much information as possible.

SSIS BASICS:Foreach Loop Container:

In this article, we look at another control flow component: the Foreach Loop container. You can use the container to loop through a set of enumerated items such files in a directory or rows in a table. The container points to whatever you wish to loop through and provides the mechanism necessary to carry out the looping.

Within the container, you can add one or more control flow tasks (or other containers). For example, you might add an ExecuteSQL task to run a T-SQL statement for each enumerator or a DataFlow task to process data related to each item.

In this article, I show you how to use the Foreach Loop container to copy files from one folder to another. We will start with a new SSIS package, so you don’t have to try to work with a previous setup. However, before configuring the package, you’ll need a set of samples files to copy. Select a drive on your local system and then create four text files. On my system, I created the following four files in the C:\Desktop\Annette\Articles\Demo folder:

- File1.txt

- File2.txt

- File3.txt

- Test1.txt

Once you’ve created your files, you’re ready to start building your package.

Setting up the Foreach Loop Container

Our first step is to drag the Foreach Loop container from the Toolbox to the control flow design surface, as shown in Figure 1.

Figure 1: Getting started with the Foreach Loop container

When adding the container to the control flow, you’ll see that it looks different from control flow tasks, such as ExecuteSQL. The Foreach Loop container will display the name of the container at the top with an empty box beneath it, as shown in Figure 2.

Figure 2: The Foreach Loop Container

To configure the Foreach Loop container, double-click the container to launch the Foreach Loop Editor. The editor includes the following four pages: - General

- Collection

- Variable Mappings

- Expressions

General Page

The General page includes the Name and Description properties, which you should define as you see fit. I’ve named my container MoveFiles and given it the description Containertomovefiles, as you can see in Figure 3.

Figure 3: Configuring the General page in the Foreach Loop Editor

That’s all there is to the General

page. The important point to remember is to provide a meaningful name

and description so that other developers can understand what’s going on.

Collection Page

Next, we move to the Collection page, which is where we select the enumerator type and configure any properties associated with that type. Figure 4 shows what the page looks like when you first access it.

Figure 4: Configuring the Collection page in the Foreach Loop Editor

The enumerator determines the type of objects that we plan to

enumerate. (Enumerate refers to the process of going through a

collection of items one-by-one.) The ForeachLoop container supports the following enumerator types: - Foreach File Enumerator: Enumerates files in a folder

- Foreach Item Enumerator: Enumerates items in a collection, such as the executables specified in an Execute Process task.

- Foreach ADO Enumerator: Enumerates rows in a table, such as the rows in an ADO recordset.

- Foreach ADO.NET Schema Rowset Enumerator: Enumerates schema information about a data source.

- Foreach From Variable Enumerator: Enumerates a list of objects in a variable, such as an array or ADO.NET DataTable.

- ForeachNodeList Enumerator: Enumerates the result set of an XML Path Language (XPath) expression.

- Foreach SMO Enumerator: Enumerates a list of SQL Server Management Objects (SMO) objects, such as a list of views in a database.

So the first thing we must do is to click in the Foreach File Enumerator listing. When the drop-down arrow appears to the right of the row, re-select Foreach File Enumerator. The options we must configure are then displayed on the Collection page, as shown in Figure 5.

Figure 5: Selecting the Foreach File Enumerator type

For this exercise, we don’t need to define an expression, so we can leave the Expressions text box empty. The next step, then, is to configure the properties in the Enumeratorconfiguration section. The first property we’ll configure is the Folder property. Click the Browse button to the right of the property. When the BrowseForFolder dialog box appears, navigate to the folder that contains the sample files you created for this exercise. On my system, I saved those files to the Desktop\Annette\Articles\Demo folder, as shown in Figure 6.

Figure 6: Navigating to the folder that contains your files

Once you have found the correct folder, select it and click on OK. When you’re returned to the ForeachLoopEditor, the folder will be listed in the Folder property. Next, we need to configure the Files property. This is where we specify which files to include in our list of enumerated files. For this exercise, we’ll copy only those files whose names start with “File.” In the Files text box, enter File*.txt. The asterisk wildcard (*) let’s us include any text file that starts with “File,” without having to specify each file. If our files had instead been Word files, we would have entered File*.doc. If we were moving multiple file types, we would have used File*.* as our property value.

Next, we need to select one of the following options in the Retrieve file name section:

- Fully qualified: The fully qualified file name should be returned when a file in the enumerated list is being referenced.

- Name and extension: The file name with its extension should be returned when a file in the enumerated list is being referenced.

- Name only: The file name without its extension should be returned when a file in the enumerated list is being referenced.

The only other option on the Collection page is Traverse subfolders. If there were subfolders that we wanted to include in our collection, we would select this option. But for now, we can leave it deselected. The Collection page should not look similar to the one shown in Figure 7.

Figure 7: Configuring the properties on the Collection page

Once we’ve defined our collection (the list of files), we’re ready to configure the VariablesMappings page. Variable Mappings Page

On the Variable Mappings page, we map our collection to a user-defined variable. The variable will point to a specific file each time the Foreach Loop container loops through a collection. Figure 8 shows what the VariableMappings page looks like when you first access it.

Figure 8: The Variables Mapping page in the Foreach Loop Editor

Because we have not yet created a variable to map to our collection,

we need to create one now. For this exercise, we’ll create the variable

from the VariableMappings page. In the Variable column of the first row of the grid, click <New Variable…> to launch the Add Variable dialog box, shown in Figure 9.

Figure 9: Adding a variable to associate with our collection

Configure each property in the AddVariable dialog box as follows: - Container: Select the name of the current SSIS package. You want the variable to be created at the package scope.

- Name: Type a name for your variable. I used FileName.

- Namespace: Stick with the default: User.

- Value Type: Select String if it’s not already selected.

- Value: Leave this blank. It will be populated with the name of the current file each time the Foreach Loop container loops through your collection of files.

- Read only: Do not select this checkbox. SSIS must be able to write to the variable.

The Variable Mappings page should now look similar to the one shown in Figure 9. Notice that the value User::FileName appears in the Variable column and the value 0 in the Index column. The Index value is specific to the ForeachItem enumerator and does not apply in this case. So we’ll stick with 0, the default value. Click OK to close the ForeachLoopEditor.

Figure 10: Configuring the Variables Mapping page

You can verify that the FileName variable has been created by viewing it in the Variables window. If the window is not visible, right-click the control flow design surface and then click Variables. The variable should be listed as one of the user variables. Adding a Variable

By creating the FileName variable, we have provided a way to identify our source file each time the ForeachLoop container iterates through our collection. We now need to create a variable that identifies our destination folder. In the Variables window, click the AddVariable button. In the new row that is added to the grid, configure each column as follows:- Name: Type a name for your variable. I used DestinationFolder.

- Scope: This should be the name of your package. You want the variable to be at the package scope.

- Data Type: Select String.

- Value: Type the name of the destination folder where you want to copy your files. I used C:\Users\Annette\Articles\Archive.

Figure 11: Adding a variable to the Variables window

We have now set up the files we want to enumerate and the variable

that will hold the path name of each source file. Our next step is to

set up the connection manager necessary to connect to our source files

in order to copy them to our destination folder. Adding the Connection Manager

To connect to our source files, we must set up a File connection manager that points to those files. Right-click the ConnectionManagers window, and then click New File Connection. When the File Connection Manager Editor appears, verify that Existingfile is selected in the Usage type drop-down list. For the File option, enter the full path name to the first text file (File1.txt) or click the Browse button, navigate to the file, and select it from the source folder. When you’re finished, the FileConnectionManagerEditor should look similar to the one shown in Figure 12.

Figure 12: Configuring the File Connection Manager Editor

Click OK to close the FileConnectionManagerEditor. The new connection manager should now be displayed in Connection Managers window. If you want, you can rename your connection manager. I renamed mine SourceFile. We now need to configure the SourceFile connection manager to use with our ForeachLoop container. To do so, we must add a property expression to the connection manager that uses the FileName variable to connect to our file. A property expression is an expression that defines a property value in place of a hard-coded value.

Right-click the SourceFile connection manager, and then click Properties. The Properties window should now be visible and should look similar to the one shown in Figure 13. The Properties window displays the properties and their values for the selected object. Because we opened the Properties window from the SourceFile connection manager, the displayed properties are specific to that connection manager.

Figure 13: The SourceFile connection manager properties

Click the Expressions property so that the browse button (the ellipses) appears on the right-hand side, then click the browse button to open the Property Expressions Editor, as shown in Figure 14:

Figure 14: Creating a property expression on the Connection String property

In the first row of the grid, select Connection String in the Property column. Then click the browse button at the far right of that row (to the right of the Expression column). This launches the Expression Builder dialog box. In the top-left window, expand the Variables node and drag the User::FileName variable to the Expression text box, as shown in Figure 15.

Figure 15: Defining a property expression

That’s all there is to it. Click OK to close the Expression Builder dialog box. The Property Expressions Editor should now look similar to the one shown in Figure 16. Click OK to close the editor.

Figure 16: Defining a property expression on the Connection String property

Once we’ve configured our File connection manager, we’re ready to run the ForeachLoop

container. However, as it stands now, the container won’t do anything

but loop through the list of files, without taking any actions on those

files. To actually copy the files to the destination folder, we need to

add a FileSystem task to the container. Adding a File System Task

To add the FileSystem task to the Foreach Loop container, drag the task from the Toolboxinto the container, as shown in Figure 17.

Figure 17: Adding the File System task to the Foreach Loop container

Notice that, on the FileSystem

task, there is a red circle with a white X. This indicates that the

task has not yet been set up, so we need to take care of that. Double-click the task to open the File System Task Editor. The editor includes a number of configurable properties, as shown in Figure 18.

Figure 18: Configuring the File System task

For each property in the File System Task Editor, set the value as follows: - IsDestinationPathVariable: Because we’ll be using a variable to specify the destination folder, set this property to True.

- DestinationVariable: This property is activated when you set the IsDestinationPathVariable property to True. From the drop-down list, select User::DestinationFolder variable—or whatever variable you created for the destination folder.

- OverwriteDestination: If there is already a file in the destination folder with the same name as the file you’re copying, the copy operation will fail if this property is set to False. Set the property to True if you want to overwrite the file in the destination folder. For the purposes of this example, I set my system to False. That way you can rerun the package multiple times without having to worry about deleting the files from the destination folder.

- Name: This property lets you specify the name you want to assign to this task. I used Copy Demo file.

- Description: This property lets you specify the description you want to assign to this task. I used Copying a file from the Demo folder.

- Operation: This property determines the operation you want the task to perform. The Operation property lets you choose from the following options:

- CopyFile

- CreateDirectory

- DeleteDirectory

- DeleteDirectoryContent

- DeleteFile

- MoveDirectory

- MoveFile

- RenameFile

- SetAttributes

- IsSourcePathVariable: If you use a variable to specify the source file, you should set this property to True. However, in our case, the ForeachLoop container uses the variable to specify the source file, not the FileSystem task itself, so we will stick with False.

- SourceConnection: This property identifies the connection manager used to connect to the source files. Select the File connection manager you set up previously. On my system, it’s SourceFile.

Figure 19: The File System Task Editor

Now click on OK. Your package is now ready. To test it click on the

green execute arrow. Once this is complete you should be able to see the

files have now been copied into the folder specified in the

destination. Summary

In this article, we used the Foreach Loop container to iterate through a set of files in a folder and move them one-at-a-time to another folder. To make that possible, we configured a property expression on out File connection manager to identify each file as we looped through the folder. Note, however, that the Foreach Loop container is not limited to files. For example, we could have used the container to process rows in a table one-by-one or other items that can be listed and grouped together. In the future articles, we’ll look at how to use the Foreach Loop container to run other tasks in a loop, such as the Script task.

SSIS Basics: Using the Execute SQL Task to Generate Result Sets:

The Execute SQL Task of SSIS is extraordinarily

useful, but it can cause a lot of difficulty for developers learning

SSIS, or only using it occasionally. What it needed, we felt, was a

clear step-by-step guide that showed the basics of how to use it

effectively. Annette has once again cleared the fog of confusion.

The Execute SQL

task is one of the handier components in SQL Server Integration

Services (SSIS) because it lets you run Transact-SQL statements from

within your control flow. The task is especially useful for returning

result sets that can then be used by other components in your SSIS

package.

When using the Execute SQL

task to return a result set, you must also implement the necessary

variables and parameters to pass data into and out of the T-SQL

statement called by the task. In this article, we look at how to use

those variables and parameters in conjunction with the Execute SQL task in order to transfer that data. (In the previous article in this series, “Introducing Variables,” I explained how to work with variables, so refer back to that article if you need help.) This article walks you through two different scenarios for working with variables, parameters, and result sets. In the first scenario, we’ll use two Execute SQL tasks. The first task retrieves a single value from a table in the AdventureWorks2008 database. That value is returned by the task as a single-row result set. The second Execute SQL task will pass that value into a stored procedure that inserts the row into a different table.

The second scenario uses a single Execute SQL task to retrieve a multi-row result set, also known as a full result set. This represents the third Execute SQL task we’ll be adding to our control flow. For now, all we’ll do is use this task to save the result set to variable. In articles to follow, you’ll see how you can use that variable in other SSIS components, such as the Foreach Loop container.

Setting Up Your Environment

Before adding components to your SSIS package, you should first add a table and two stored procedures to the AdventureWorks2008 database. The table will store the value that’s returned by the first Execute SQL task. Listing 1 shows the T-SQL necessary to create the SSISLog table.

CREATE TABLE SSISLog(ID INT IDENTITY,DateRun DATETIME,Result INT)

Listing 1: Creating the SSISLog table

Next, we will add a stored procedure to insert data into the SSISLog table. Listing 2 provides the T-SQL script necessary to create the UpdateSSISLog stored procedure. Notice that it includes an input parameter. The input will be the data that will be retrieved via the first Execute SQL task.

CREATE PROCEDURE UpdateSSISLog @EmpNum INT

AS

INSERT INTO SSISLog(DateRun, Result)SELECT GETDATE(),EmpNum

AS

INSERT INTO SSISLog(DateRun, Result)SELECT GETDATE(),EmpNum

Listing 2: Creating a stored procedure that inserts data into the SSISLog table

Once you’ve set up the table and stored procedures, you can create

your SSIS package, if you haven’t already done so. We’ll perform both

exercises in a single package. Our next step, then, is to add a couple

variables to our package. Adding Two Variables to the SSIS Package

The first variable we’ll create is the EmpNum variable. If the Variables window is not open, right-click the Control Flow workspace, and then click V ariables. In the Variables window, add a new variable by clicking on the Add Variable icon.Name the new variable EmpNum, and ensure that the scope is set at the package level, as indicated by the package name. (In my case, I’ve stuck with the default name, which is Package.) Next, set the data type to Int32 and the value to 0, as shown in Figure 1. The Execute SQL task will use the variable to store the value it retrieves from the database.

Figure 1: The new EmpNum variable

Now create a second variable named EmployeeList. This variable should also be at the package scope. However, set the data type to Object.

We will be using this variable to store the full result set that we

retrieve in our second scenario, and SSIS requires that the variable use

the Object type to accommodate the multiple rows and columns. Adding a Connection Manager to the SSIS Package

The next step is to create a connection manager that points to the AdventureWorks2008 database. Right-click the Connection Manager s window, and then click New OLE DB Connection, as shown in Figure 2.

Figure 2: Creating a new OLE DB connection manager

When the Configur e OLE DB Connection Manager dialog box appears, click the New button to launch the Connection Manager dialog box, shown in Figure 3. From the Server name drop-down list, select the name of your SQL Server instance, and then select an authentication type. From the Select or enter a database name drop-down list, select your database. As you can see in Figure 3, I’m using 192.168.1.19/ Cambridge as my SQL Server instance, SQL Server Authentication as my authentication type, and the AdventureWorks2008 as my database.

Figure 3: Configuring an OLE DB connection manager

Be sure to test the connection by clicking the Test Connection button. If the connection is good, click OK to close the Connection Manager dialog box. When you’re returned to the Configure OLE DB Connection Manager dialog box, you’ll see that your new connection has been added to the Data connections section. Click OK to close the dialog box. Your connection should now be listed in Connection Managers window.

If you want, you can rename your connection manager to something more appropriate. To do so, right-click the connection, click R ename, and type in the new name. I renamed mine to AW2008, as shown in Figure 4.

Figure 4: Renaming a connection manager

Returning a Single-Row Result Set

As mentioned above, our first example uses two instances of the Execute SQL task. The first Execute SQL task will return a single-row result set, which in this case, will contain only one value. Note, however, that this is not a real-world example. I simply want to show you how to get the result set and use it.In this example, we’ll retrieve the highest BusinessEntityID value from the HumanResources.Employee table and insert it into the SSISLog table, along with the current date and time. We’ll start by using the first Execute SQL task to retrieve the value and pass it to the EmpNum variable.

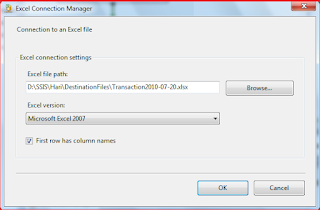

To get started, drag the Execute SQL task onto the Control Flow design surface. Then double-click the task to open the Execute SQL Task Editor. The editor opens to the General page, as shown in Figure 5.

Figure 5: The General page of the Execute SQL Task Editor

Notice that the General section contains the Name property and the Description property. The Name property refers to the task name. You should name the task something suitable. On my system, I named the task Get ResultSet. I then added a description to the Description property to explain what the task does. In the Options section, I stuck with the default property values.

The next section on the General page is Result Set. Notice that this section includes only the ResultSet property. The property lets you select one of the following four options:

- None: The query returns no result set.

- Singlerow: The query returns a single-row result set.

- Fullresultset: The query returns a result set that can contain multiple rows.

- XML: The query returns a result set in an XML format.

Next, we need to configure the properties in the SQL Statement section. Table 1 shows the values you should use to configure these properties.

| Property | Value |

| Connection | AW2008 (or whatever you named the connection manager you created earlier) |

| SQLSourceType | Direct input This means we’ll type the code straight in and not use a stored procedure. |

| SQLStatement | Because we’ve selected the Direct input option, we need to enter a

T-SQL statement for this option. I’ve used the following statement,

which returns a single value: SELECT MAX(EmployeeID) AS [MaxEmpID] FROM HumanResources.Employee |

| IsQueryStoredProcedure | This option is greyed out because we selected Direct input for the SQLSourceType property. Had we selected Stored Procedure, this property would be available and the SQLStatement property would be greyed out. |

| BypassPrepare | The property defaults to False. If you change the value to True, you can click the Parse Query button to verify that your T-SQL statement is valid. |

Table 1: Configuring the properties in the SQL Statement section

Our next step is to associate our result set value with a variable

that will store the value we retrieve from the database. To do this, go

to the Result Set page of the Execute SQL Task Editor. The main grid of the Result Set page contains two columns: Result Name and Variable Name. Click the Add button to add a row to the grid. In the Result Name column, enter the column name returned by your query (MaxEmpID). In the Variable Name column, select the User:: EmpNum variable. Your Result Set page should now look similar to the one shown in Figure 6.

Figure 6: Associating your result set value with a variable

If our single-row result set contains multiple columns, we would have

had to map a variable to each column. However, because we returned only

one value, we needed only one mapping. Once you’ve associated your result set value with a variable, click OK to close the Execute SQL Task Editor. You task should now be set up to return a single-row result set. Now we need to do something with that result set!

Working with a Single-Row Result Set

Our next step is to drag a new Execute SQL task onto our design surface so we can use the result set returned by the first Execute SQL task. So add the task, and then connect the precedence constraint (the green arrow) from the first task to the new one. Next, right-click the second task and click Edit to open the Execute SQL Task Editor, shown in Figure 7.

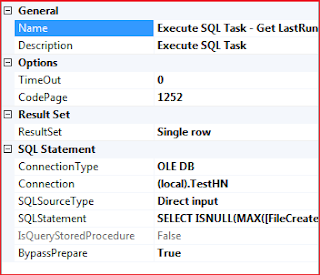

Figure 7: Configuring the Execute SQL Task Editor

In the General section, provide a name and description for the task. (I named the task Using Result Set.) For the ResultSet property, stick with the default value, None. In this case, the task won’t be returning a result set. Instead, we’ll be using the results returned by the previous task. Now let’s look at the SQL Statement section shown in Figure 8. Notice that, for the SQLStatement property, I entered the following T-SQL code:

exec UpdateSSISLog ?

As you can see, we’re executing the UpdateSSISLog stored procedure. Notice, however, that we follow the name of the stored procedure with a question mark (?).

The question mark serves as a placeholder for the parameter value that

the stored procedure requires. You cannot name parameters within the

actual query, so we have to take another step to provide our value. Go to the Parameter Mapping page of the Execute SQL Task Editor. On this page, you map the parameters referenced in your queries to variables. You create your mappings in the main grid, which contains the following five columns:

- Variable Name: The variable that contains the value to be used for the parameter. In this case, we’ll use the User:: EmpNum variable, which contains the result set value returned by the first Execute SQL task.

- Direction: Determines whether to pass a value into a parameter (input) or return a value through the parameter (output)

- Data Type: Determines the type of data provided from the variable. This will default to the type used when setting up the variable.

- Parameter Name: The name of the parameter. The way in which parameters are named depends on your connection type. When running a T-SQL statement against a SQL Server database through an OLE DB connection, as we’re doing here, we use numerical values to represent the statement’s parameters, in the order they appear in the statement, starting with 0. In this case, because there’s only one parameter, we use 0.

- Parameter Size: The size of the parameter if it can be a variable length. The default is -1, which lets SQL Server determine the correct size.

Figure 8: Mapping a variable to a parameter

When you’re finished configuring the Execute SQL task, click OK. Your package should now be ready to run. Click the green Execute button. When the package has completed running, query the SSISLog table and verify that a row has been added that contains the expected results.

Returning a Full Result Set

Using the Execute SQL task to return a full result set is similar to returning a single-row result set. The primary differences are that your target variable must be configured with the Object data type, and the task’s ResultSet property must be set to Full result set.Let’s run through an example to demonstrate how this works. This time, rather than retrieving a single value, we’re going to retrieve a result set that contains multiple rows and columns.

For this exercise, we can use the same SSIS package we used for the previous example, but keep in mind, if you execute the package, all components will run unless you specifically disable those that you don’t want to have run.

Drag an Execute SQ L task to the design surface. Open the task’s editor and configure the properties as necessary. Remember to set the ResultSet property to Full result set. For the SQLStatement property, use the SELECT statement shown in Listing 3. When entering a long SELECT statement into as the property’s value, it’s easier to click the ellipses button to the right of the property to open the Enter SQL Query dialog box and then entering the statement there.

SELECT DATEDIFF(YEAR, HireDate, GETDATE())E.BusinessEntityID,P.FirstName ,P.LastName ,E.JobTitle ,E.Gender FROM HumanResources.Employee EINNER JOIN Person.Person PON E.BusinessEntityID = P.BusinessEntityIDWHERE DATEDIFF(YEAR, HireDate, GETDATE()) >= 15

Listing 3: The SELECT statement used to return a full result set

After you enter your SELECT statement, close the Enter SQL Query dialog box. When you’re returned to the Execute SQL Task Editor, the General page should now look similar to the one shown in Figure 9.

Figure 9: Configuring the Execute SQL task to return a full result set

Next, go to Result Set page and add a row to the main grid. Because we’re returning a full result set, you should enter 0 in the Result Name column. (The same is true if you’re returning an XML result set). Then, in the Variable Name column, select the User:: E mployeeList variable. Once this is complete, click OK. Your Execute SQL task will now return a full result set and save it to the E mployeeList variable. (You should execute the task to make sure it runs.) You can then use that variable in other SSIS components. However, to do so is a bit more complicated than what we saw for a single-row result set, so I’ll save that discussion for another time. But feel free to try using the variable if your up for it. You might want to start with a Foreach Loop container.

Summary

In this article, I demonstrated how to use an Execute SQL task to return a single-row result set with a single value and save that value to a variable. I then showed you how to use the variable in a second Execute SQL task to insert data into a table.In the second example, I demonstrated how to use an Execute SQL task to return a full result set and save it to a variable configured with the Object data type. Although I did not show you how to use the result set in other components, you should now have a good sense of the principles behind using the Execute SQL task to retrieve result sets and saving them to variables.

In future articles, I’ll demonstrate how you can use those result sets in other components, such as the Script task and the Foreach Loop container.

SSIS Basics: Introducing Variables:

In the third of her SSIS Basics articles, Annette Allen

shows you how to use Variables in your SSIS Packages, and explains the

functions of the system-defined variables.

In previous articles in the SSIS Basics series, I showed you how to set up a SQL Server Integration Services (SSIS) package. I also showed you how to add a Data Flow task

that contains the components necessary to extract data from a SQL

Server database, transform the data, and load it into an Excel

spreadsheet.

In this article, I will show you how to use variables in your SSIS

package. Variables are extremely important and are widely used in an

SSIS package. A variable is a named object that stores one or more

values and can be referenced by various SSIS components throughout the

package’s execution. You can configure a variable so its value is

updated at run time, or you can assign a value to the variable when you

create it.

There are two types of variables in an SSIS package: system and user-defined. SSIS automatically generates the system variables when you create your package. Components can then reference the system variables as necessary, usually for debugging and auditing purposes. You create user-defined variables as needed when you configure your package.

Setting Up Your SSIS Package

To try out the examples in this article all you need to do is to create

an Excel spreadsheet named Employee, for the purposes of this article

it can be an empty file. This does follow on from the previous article

in this series “SSIS Basics: Adding Data Flow to Your Package” however it is adviseable to start from a new package.

Creating Variables

In the following sections, I will show you how to create a user-defined variable and reference it within your package. Then I will show you how to create an expression that dynamically updates the variable value at run time. Our starting point is where my previous article left off; however, all you need to do is create an Excel spreadsheet named Employee, I have saved it in the root of my d:\ drive but you can save it wherever appropriate, once this is created you should have no problems working through these examples.The Variables Window

The easiest way to create a user-defined variable is by using the Variables window. When you create a variable, you specify a name, data type, and scope.The scope controls which package elements can use the variable. You can define a variable’s scope at the package level or at the level of a specific container, task, or event handler. For example, if you configure a variable at the scope of a ForeachLoop container, only that container and any tasks within the container can use the variable. However, if you configure the variable at the package level, any component within the package can reference the variable. One of the most common issues to arise when working with variables is for them to have been inadvertently created at the scope of a container or task, when they should have been created at the scope of the package.

To view the Variableswindow, right-click the design surface on the ControlFlow tab, and clickVariables. By default, the Variables window appears to the left of the design surface, as shown in Figure 1.

Figure 1: Variables window in SSIS Designer

At the top of the Variables window, you’ll find five buttons (shown in Figure 2) and, beneath those, column headings.

Figure 2: Buttons at the top of the Variables window

From left-to-right, the buttons let you perform the following tasks:

- Adding a user-defined variable

- Deleting a user-defined variable (available when a variable has been created)

- Showing system variables

- Showing all variables

- Choosing the variable columns to display

- Name: The name assigned to the variable. When you first create your variable, the value Variable is used. If there is already a variable named Variable, a number is added to the name.

- Scope: The scope at which the variable should be set. The majority of the time, you can go with a package-level scope, which means the scope should be the name of the package. To select a different scope, you must select the applicable task or container when you create your variable. It is very easy to inadvertently create a variable at an unintended scope. Before creating a variable, be certain that the correct task or container is selected or, if you want a package-level scope, that no components are selected.

- DataType: The type of data that the variable can store. SSIS supports the following variable types:

- Boolean

- Byte

- Char

- DateTime

- DBNull

- Double

- Int16

- Int32

- Object

- SByte

- String

- UInt32

- UInt64

- Value: The variable’s initial value. This can be populated here or left blank and populated at run time.

Figure 3: Additional columns available for variables

As you can see, the Scope, Data type, and Value columns are selected by default. You can also select one of the following columns to display:

- Namespace: Shows whether the variable is a system or user-defined variable.

- Raise event when variable value changes:Boolean (True/False) field that if selected will fire an OnVariableValueChanged event if the variable value is modified. This will be covered in more detail in a future article when looking at error handling and debugging.

Creating a User-Defined Variable

In this section, I demonstrate how to create a variable and assign a value to it, which in this case, will be a file path. Using the Excel spreadsheet created in the setup, I will save a copy of the spreadsheet to a new folder using a variable to re-name it.To create a variable, click the white space on the Control Flow design surface to ensure that no components are selected, and then click the Add Variable button in the Variables window. A variable is added to the first row of the window’s grid, as shown in Figure 4.

Figure 4: A new variable in the Variables window

Notice in Figure 4 the blue button with the black X to the left of the

variable name. You can use this button to delete the variable. Also

notice that the scope is set to the package name, SSISBasics, which is the scope we want to use for this exercise.

By default, the variable is configured with the Int32 data type. However, for this exercise, we’ll use String. To change the data type, select String from the drop-down list in the Data Type column. When the data type is updated, the value in the Value column changes from 0 to an empty string.

You now need to enter the variable value. Simply type in the path name you plan to use for your Excel file. In this case, I used d:\Demo. I also changed the variable name to DestinationFilePath, as shown in Figure 5.

Figure 5: Configuring the new variable in the Variables window

You’re variable should now be ready to go. Because you created it with a

package scope, any component in your package can use that variable.

Using the Variable

I am now going to show you how to reference the new variable from within a File System task. First, you must add the task to the control flow, as shown in Figure 6.

Figure 6: Adding a File System task to the control flow

Then double-click the File System task to open the File System Task Editor, as shown in Figure 7.

Figure 7: Accessing the File System Task Editor

You now need to configure the task’s properties. Table 1 describes how to configure each of these properties

| Property | Description |

| IsDestinationPathVariable | Change to True. |

| DestinationVariable | Select the variable you created earlier from the drop-down list associated with this property. When the variable is added to the property, it is preceded by the namespace name and two colons (User::). |

| OverwriteDestination | If you want to overwrite the destination every time the package runs, select True, otherwise leave it as False. However, if you select False and run the package more than once, SSIS will generate an error. As the name of the excel spreadsheet will not change, I suggest you select True. |

| Name | The default name is File System Task. You can rename the task to better reflect what it does. For this exercise, I renamed the task Copy to new folder. |

| Description | You can type a full description here. I used Copy the Employee’s excel spreadsheet to d:\Demo\. |

| Operation | The default option is Copy File. Because that’s what we’ll do in this exercise, you can leave this option selected. But note that other options are available, and I will cover some of them in future articles. |

| IsSourcePathVariable | Leave this option set at its default value, False. Although we’ll be setting the destination to a variable, the source path will directly reference the original Excel file. |

| SourceConnection | This is where you select the connection to the source file you want to copy. From the drop-down list associated with this property, select as currently we have no connections set up. The Usage type: defaults to Existing File which is correct, as we have already created the spreadsheet, select the Browse button and browse to the Excel spreadsheet created called Employee.xlsx and click OK to accept. |

Table 1: Configuring the File System task properties

Figure 8 shows what your File System Task Editor should look like after you’ve configured the properties. Review your settings, and then click OK.

Figure 8: Configuring properties in the File System Task Editor

The control flow should now show that the inverted red X has been removed from the FileSystem task and that the task has been renamed to Copy to new folder.

To verify whether the task works, run the package by clicking on the green arrow (the Run button) on the menu bar. Then open Windows Explorer and check that the file has been copied into the target folder correctly.

Using an expression to define a variable value

In some cases, you might want to generate a variable’s value at run time, rather than assign a specific value, as we did in the previous exercise. This can be useful if the variable value needs to change during the package’s execution or that value is derived from other sources or processes. For example, the Foreach Loop container might use a variable whose value must change each time the container loops through the targeted list of objects. (I’ll explain how to use a variable in this way in a future article.)In this section, I demonstrate how to create an expression that dynamically generates a value for a String variable named DestinationFileName. The exercise uses the same test environment as the one in the previous exercise. We will again copy the original Excel file to the destination folder, only this time we’ll use an expression to define the path and file name. The expression will rename the file by tagging the date to the end of the filename, as in Employee_201265.xlsx.

Your first step, then, is to create a variable named DestinationFileName, Follow the same steps you used to create the DestinationFilePath variable in the previous exercise, but leave the value blank, as shown in Figure 9.

Figure 9: Creating the DestinationFileName variable

To populate the value, you’ll create an expression in the variable’s

properties. An expression is a formula made up of elements such as

variables, functions, and string values. The expression returns a single

value that can then be used by the variable.

To create an expression for the DestinationFileName variable, open the Properties window to display the variable’s properties. You can open the window by clicking Properties Window on the View menu. You can also open the Properties window by pressing F4. The Properties window displays the properties for whatever component is selected in your SSIS package. To display the properties for the DestinationFileNamevariable, select the variable in the Variables window.

When the variable’s properties are displayed in the Properties window, you can create an expression that defines the variable’s value. To do so, first set the EvaluateAsExpression property to True. This enables the variable to use an expression to define its value. Then click the browse button (…) associated with the Expression property, as shown in Figure 10.

Figure 10: Launching the Expression Builder dialog box

Clicking the browse button opens the Expression Builder dialog box, which is divided into three sections, as shown in Figure 11.

Figure 11: The Expression Builder dialog box

Notice in Figure 11 that I’ve labeled the three sections of the ExpressionBuilder dialog box as 1, 2, and 3:

- The variables available to use in your expression

- The functions and operators available to use in your expression

- The workspace where you create your expression

In the previous section, we created the DestinationFilePath variable, which held the target folder into which we copied the Excel file. In this exercise, we’ll again copy the original Excel file to the new folder as described earlier. To do this, we’ll use the DestinationFilePath variable as part of our expression to provide the path name for the new DestinationFileName variable.

As a result, the first step you should take to create your expression is to drag the DestinationFilePath variable to the Expression section, as show in Figure 12. If you click the Evaluate Expression button after you add the variable, the Evaluatedvalue field should show the value assigned to that variable, which is the path d:\Demo.

Figure 12: Adding the DestinationFilePath variable to your expression

Next, you’ll need to add to your expression the elements necessary to name the file. First, add a concatenation operator (+), and then add the string value Employee, enclosed in double quotes, as shown in the following script:

@[User::DestinationFilePath] + "Employee"

You can evaluate your expression at any time by clicking on the EvaluateExpression button. For instance, if you evaluate your expression at this point, you should receive the following results:

d\Demo\Employee

I will now show you how to include the date and time in the expression

so that the variable can include them. To create this part of the

expression, we’ll use the following functions:

- GETDATE: Returns the current date and time.

- YEAR: Returns the year component (as an integer) of a date value.

- MONTH: Returns the month component (as an integer) of a date value.

- DAY: Returns the day component (as an integer) of a date value.

- RIGHT: Returns the number of characters specified counting from the right of the string.

Note:

There are many ways to build the expression I am about to explain, but this is the method I use and find easiest.

There are many ways to build the expression I am about to explain, but this is the method I use and find easiest.

After we add each component, we can use the EvaluateExpression button to see what the value looks like to ensure that we’re happy with it up to that point.

Because we want the year to appear before the other data parts, we’ll start with that element of the expression. To add the year, we’ll use the YEAR function along with the GETDATE function. This will return a four-digit integer, which we’ll convert to a string.

As noted above, the GETDATE function returns the current timestamp. But we can apply the YEAR function to that timestamp to extract only the year value, as shown in the following code fragment:

YEAR(GETDATE())

This will return only the year, but as an integer. However, because we

will be concatenating that value with a string value, which need to

convert the year to a string, as the following code shows:

(DT_WSTR,4)YEAR(GETDATE())

Notice that, to convert the year value to a string, we must precede the YEAR

function with the target data type and length in parentheses. We can

then append this code with our original code, as shown in the following

script:

@[User::DestinationFilePath] + "Employee"

+ (DT_WSTR,4)YEAR(GETDATE())

Now if we use the Evaluate Expression button, the results should look like the following:

+ (DT_WSTR,4)YEAR(GETDATE())

d:\Demo\Employee2012

Next, we need to add the month to our expression. We will use the MONTH function with the GETDATE function in similar to how we used the YEAR function above. However, we must take extra steps to accommodate the fact that the MONTH

function returns a single digit for months prior to October and returns

two digits from the other months. When the function returns a single

digit, we need to precede the returned value with a 0

to ensure we always return two characters. That way, dates such as 15

June 2012 will be returned as 20120615, rather than 2012615, which makes

it easier to manage files in such applications as Windows Explorer.

The trick in doing this is to add the 0 only when we need it. That’s where the RIGHT function comes in. The function takes two arguments. The first argument is an expression that returns a character value. The second argument is the number of characters we want to extract from that value. However, those characters are extracted from right-to-left.

Let’s look at a couple examples of the RIGHT function to demonstrate how it works. The first one includes a simple expression that concatenates 0 and 6:

RIGHT("0"+"6",2)

The expression comprises everything before the comma. In this case, it

concatenates the two values (rather than adding them) and returns the

value 06. The second argument, 2,

specifies that only the right two characters be returned by the

function. Because the expression returned only two characters, the

function will return both of them.

However, suppose your expression returns more than two characters. In the following example, a single digit is concatenated with two digits:

RIGHT("0"+"12",2)

The expression in this case returns the value 012. However, the second argument specifies that the RIGHT function should return only the right two characters, so the function will return only 12.

Now let’s return to the expression we’re creating to generate the file name. At this point, we’re trying to add the month components. First, we need to extract the month and convert it to a string value, just like we did with the year:

(DT_WSTR, 2) MONTH(GETDATE())

As you would expect, this part of the expression will return a

one-digit or two-digit integer that represents the month. We can then

use this code within the RIGHT function to ensure that we always extract two digits:

RIGHT("0"+(DT_WSTR, 2) MONTH(GETDATE()) ,2)

Notice that the first argument is an expression that concatenates a 0

with the month returned by the other part of the formula. That means

the expression will also return a two or three characters, depending on

the month. However, because 2 is specified as the second argument, the RIGHT

function will return only the right two characters, thus ensuring that

the outer expression always includes two characters for the month.

We can then concatenate this code with our original expression, as shown in the following script:

@[User::DestinationFilePath] + "Employee"

+ (DT_WSTR,4)YEAR(GETDATE())+ RIGHT("0"+(DT_WSTR, 2) MONTH(GETDATE()) ,2)

After the month is added to our outer expression, we use the Evaluate Expression button to view the current value.. The results should look similar to the following:

+ (DT_WSTR,4)YEAR(GETDATE())+ RIGHT("0"+(DT_WSTR, 2) MONTH(GETDATE()) ,2)

d:\Demo\Employee201207

Next, we will add the day to our expression. Adding the day is similar to what we did to add the month, except that we use the DAY function, as shown in the following code:

@[User::DestinationFilePath] + "Employee"

+ (DT_WSTR,4)YEAR(GETDATE())+ RIGHT("0"+(DT_WSTR, 2) MONTH(GETDATE()) ,2)+ RIGHT("0"+(DT_WSTR, 2) DAY(GETDATE()) ,2)

As you can see, we’ve concatenated the day information with the rest of our expression. When you click the Evaluate Expression button, it should now return results similar to the following:

+ (DT_WSTR,4)YEAR(GETDATE())+ RIGHT("0"+(DT_WSTR, 2) MONTH(GETDATE()) ,2)+ RIGHT("0"+(DT_WSTR, 2) DAY(GETDATE()) ,2)

d:\Demo\Employee20120715

All that’s left to do is to add the Excel file extension. Simply add another concatenate operator, followed by .XLSX enclosed in double quotes. Our full expression should now be complete:

@[User::DestinationFilePath] + "Employee"

+ (DT_WSTR,4)YEAR(GETDATE())+ RIGHT("0"+(DT_WSTR, 2) MONTH(GETDATE()) ,2)+ RIGHT("0"+(DT_WSTR, 2) DAY(GETDATE()) ,2)+ ".XLSX"

If we click the Evaluate Expression button one more time, we should see the results we’ve been looking for:

+ (DT_WSTR,4)YEAR(GETDATE())+ RIGHT("0"+(DT_WSTR, 2) MONTH(GETDATE()) ,2)+ RIGHT("0"+(DT_WSTR, 2) DAY(GETDATE()) ,2)+ ".XLSX"

d:\Demo\Employee20120623.XLSX

We’ve built this expression up in stages so you could better see how

all the pieces fit together. The expression, as it appears in the Expression Building dialog box on your system, should now look similar to the one shown in Figure 13.

Figure 13: Your final expression in the Expression Builder dialog box

After you’ve evaluated your expression, click OK to close the ExpressionBuilder dialog box. The Variables window should now show the value of the DestinationFileName variable as the one generated by the expression, as shown in Figure 14.

Figure 14: The new value for the DestinationFileName variable

Your final step is to update the File System task so it uses the DestinationFileName variable. To do this, open the File System Task Editor for the task named Copy to new folder. Change the value of the DestinationVariable property to the DestinationFileName variable, as shown in Figure 15.

Figure 15: Using the DestinationFileName variable

Click OK to close the File System Task Editor.

Then check that the new variable works correctly by running the

package. When you’re finished, open Windows Explorer and verify that the

new file has been created and that it uses the correct name.

System Variables

Each SSIS package includes a large number of system variables that you can use for debugging, error handling, change tracking, and other purposes. Figure 16 shows a list of system variables in our package.

Figure 16: System variables in our SSIS package

Like user-defined variables, system variables are scope-specific. In

other words, they are either related to a task, container, or package.

The top red block in Figure 16 are system variables linked to the DataFlow

task, and the bottom block are those related to the package as a whole.

The variable in the blue box is the user-defined variable DestinationFilePath.

Summary

In this article, we created two variables. For the first variable, we assigned a static value, which is used by the variable whenever it is referenced during package execution. For the second variable, we used an expression to define the value. This way, the variable’s value is generated automatically at run time. The article also demonstrated how to use the Copy File function in File System task to copy a file and rename it. We have also looked briefly at system variables. In future articles, I will show you how to use these for error handling and debugging and how to work with user-defined variables when setting up your package’s configuration and deployment. I will also show you how to use variables more extensively.

Working with SSIS Data Types:

Because SSIS types are independent from the other systems, each SSIS type can map to a variety of types in those systems. For example, SSIS does not include geospatial data types such as those you find in SQL Server. Instead, SSIS uses an image type that is specifically mapped to the geospatial types. Yet the image type is not limited to those geospatial types. It is also mapped to other data types in the various systems.

The data types supported by SSIS can be divided into the following categories:

- Numeric: Types that support numeric values formatted as currencies, decimals, and signed and unsigned integers. SSIS supports more numeric types than any other kind.

- String: Types that support ANSI and Unicode character strings.

- Date/Time: Types that support date values, time values, or both in various formats.

- Binary: Types that support binary and image values.

- Boolean: A type to handle Boolean values.

- Identifier: A type to handle globally unique identifiers (GUIDs).

USE AdventureWorks2012;GOIF OBJECT_ID('dbo.AWProducts') IS NOT NULLDROP TABLE dbo.AWProducts;GOCREATE TABLE dbo.AWProducts(

NewProdID NVARCHAR(40) NOT NULL PRIMARY KEY,

OldProdID INT NOT NULL,

ProdName NVARCHAR(50) NOT NULL,

MakeFlag BIT NOT NULL,

ReorderPoint SMALLINT NOT NULL,

ProdPrice MONEY NOT NULL,

Weight DECIMAL(8,2) NULL,

ProductLine NCHAR(2) NULL,

SellStartDate DATETIME NOT NULL,

EndDate DATE NULL,

OldProdGUID UNIQUEIDENTIFIER NOT NULL

);

The package I use to demonstrate the SSIS types is very basic and includes only the following components: NewProdID NVARCHAR(40) NOT NULL PRIMARY KEY,